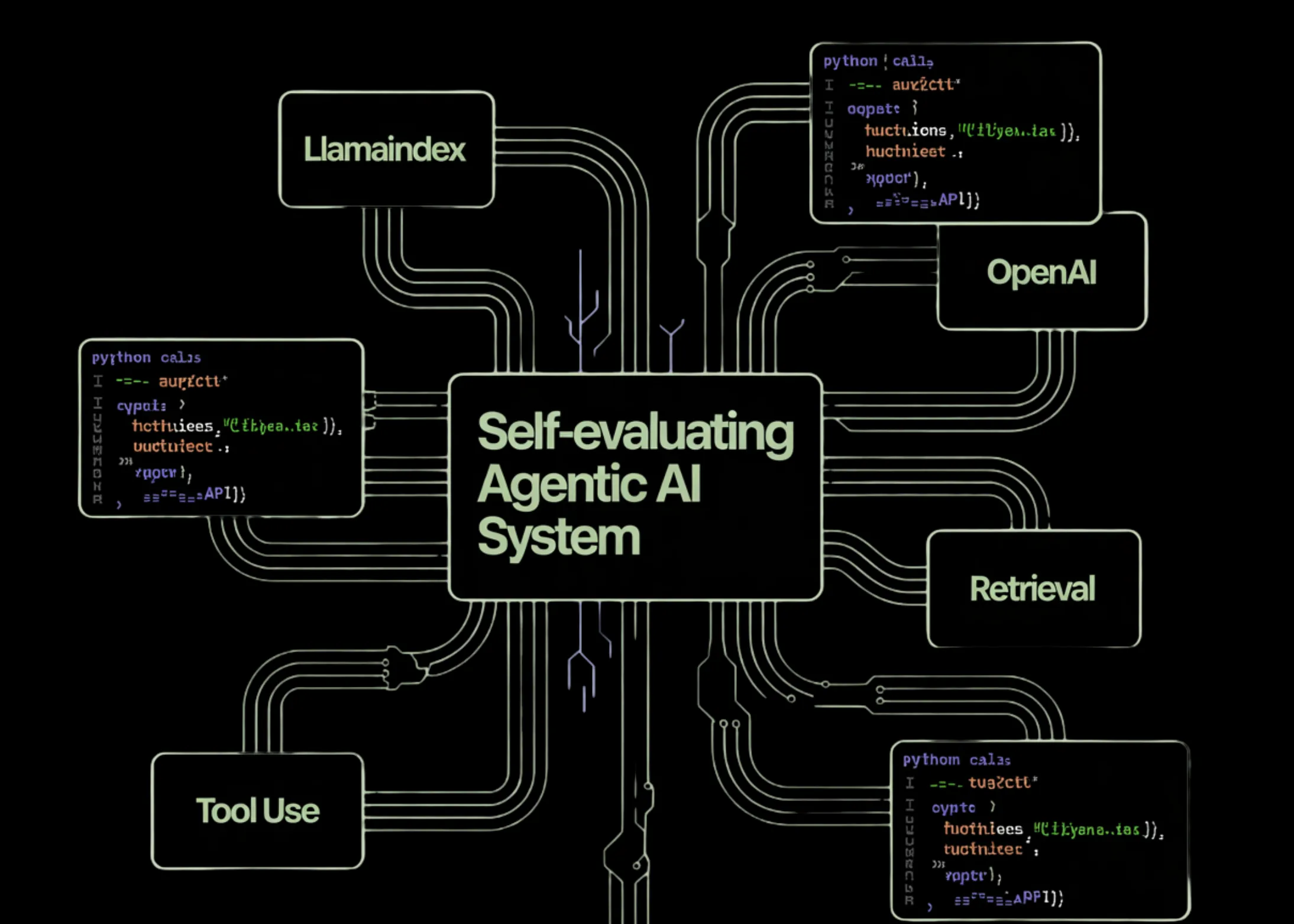

How to Build a Self-Evaluating Agentic AI System with LlamaIndex and OpenAI Using Retrieval, Tool Use, and Automated Quality Checks

In this tutorial, we build an advanced agentic AI workflow using LlamaIndex and OpenAI models. We focus on designing a reliable retrieval-augmented generation (RAG) agent that can reason over evidence, use tools deliberately, and evaluate its own outputs for quality. By structuring the system around retrieval, answer synthesis, and self-evaluation, we demonstrate how agentic patterns go beyond simple chatbots and move toward more trustworthy, controllable AI systems suitable for research and analytical use cases.
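Before looking at any library code, it may help to see the retrieve, answer, evaluate, revise control flow on its own. The sketch below is a hypothetical, dependency-free stand-in. `run_pipeline`, `Draft`, and the three lambdas are illustrative stubs, not LlamaIndex or OpenAI calls. The 0.5 threshold and the single revision pass are assumptions chosen to mirror the "revise once if scores are low" instruction in the agent's system prompt.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Draft:
    text: str
    score: float
    revised: bool = False


def run_pipeline(
    question: str,
    retrieve: Callable[[str], List[str]],
    answer: Callable[[str, List[str]], str],
    evaluate: Callable[[str, str, List[str]], float],
    threshold: float = 0.5,
) -> Draft:
    """Retrieve -> answer -> evaluate, then revise at most once if the score is low."""
    evidence = retrieve(question)
    text = answer(question, evidence)
    score = evaluate(question, text, evidence)
    if score >= threshold:
        return Draft(text, score)
    # Single revision pass: re-ask with an instruction to stay grounded in the evidence.
    revised_text = answer(question + " (answer only from the evidence)", evidence)
    return Draft(revised_text, evaluate(question, revised_text, evidence), revised=True)


# Toy stubs standing in for the retriever, the LLM, and the evaluator.
toy_docs = ["RAG evaluation focuses on faithfulness, answer relevancy, and retrieval quality."]
draft = run_pipeline(
    "How is RAG evaluated?",
    retrieve=lambda q: toy_docs,
    # The "LLM" hallucinates unless told to use the evidence.
    answer=lambda q, ev: ev[0] if "evidence" in q else "RAG systems never hallucinate.",
    # The "evaluator" gives full marks only to answers copied from the evidence.
    evaluate=lambda q, a, ev: 1.0 if a in ev else 0.0,
)
print(draft)
```

Keeping each stage as a separate callable is what makes the loop easy to test and to extend: any stage can be swapped for a real retriever, model, or evaluator without touching the control flow.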

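```python
# Install dependencies first (for example, in a notebook cell):
# !pip install -q llama-index llama-index-llms-openai llama-index-embeddings-openai nest_asyncio
import os
import asyncio
from getpass import getpass

import nest_asyncio

# Jupyter/Colab already runs an event loop; nest_asyncio lets us re-enter it.
nest_asyncio.apply()

# Prompt for the key only if it is not already set, so it is never hardcoded.
if not os.environ.get("OPENAI_API_KEY"):
    os.environ["OPENAI_API_KEY"] = getpass("Enter OPENAI_API_KEY: ")
```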

We set up the environment for running the agentic AI workflow. We load the OpenAI API key securely at runtime, so credentials are never hardcoded, and we apply nest_asyncio so that the notebook's already-running event loop can execute the agent's asynchronous code.
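The key-loading check can be factored into a small helper that is easier to reuse and test. This is a hypothetical sketch: `load_secret` is not part of LlamaIndex or the OpenAI SDK. Injecting `env` and `prompt` lets it run without a terminal, while the default `getpass` keeps the typed key off the screen.

```python
import os
from getpass import getpass
from typing import Callable, MutableMapping


def load_secret(
    name: str,
    env: MutableMapping[str, str] = os.environ,
    prompt: Callable[[str], str] = getpass,
) -> str:
    """Return env[name]; if missing, prompt for it (no echo by default) and store it."""
    value = env.get(name, "")
    if not value:
        value = prompt(f"Enter {name}: ").strip()
        if not value:
            raise ValueError(f"{name} must not be empty")
        # When env is os.environ, later readers (e.g. the OpenAI client) will find it.
        env[name] = value
    return value


# Interactive use in the notebook:
#   load_secret("OPENAI_API_KEY")
# Non-interactive use with an injected prompt and a plain dict instead of os.environ:
fake_env = {}
key = load_secret("OPENAI_API_KEY", env=fake_env, prompt=lambda _: "  sk-test  ")
print("key loaded:", bool(key))
```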

```python
from llama_index.core import Document, Settings, VectorStoreIndex
from llama_index.llms.openai import OpenAI
from llama_index.embeddings.openai import OpenAIEmbedding

# Global defaults used by the index, query engine, evaluators, and agent.
Settings.llm = OpenAI(model="gpt-4o-mini", temperature=0.2)
Settings.embed_model = OpenAIEmbedding(model="text-embedding-3-small")

# A tiny knowledge base; each string becomes one Document.
texts = [
    "Reliable RAG systems separate retrieval, synthesis, and verification. Common failures include hallucination and shallow retrieval.",
    "RAG evaluation focuses on faithfulness, answer relevancy, and retrieval quality.",
    "Tool-using agents require constrained tools, validation, and self-review loops.",
    "A robust workflow follows retrieve, answer, evaluate, and revise steps.",
]
docs = [Document(text=t) for t in texts]

# Embed and index the documents, then expose a retriever-backed query engine.
index = VectorStoreIndex.from_documents(docs)
query_engine = index.as_query_engine(similarity_top_k=4)
```

We configure the OpenAI language model and embedding model and build a compact knowledge base for our agent. We transform raw text into indexed documents so that the agent can retrieve relevant evidence during reasoning.
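To make the retrieval step concrete: the index embeds every document, and a query with `similarity_top_k=4` returns the four nodes most similar to the query embedding. The toy sketch below mimics that ranking with bag-of-words counts and cosine similarity. It is a dependency-free stand-in, not how `text-embedding-3-small` or LlamaIndex's vector store work internally. One consequence is worth noting: each short text here most likely becomes a single node, so `similarity_top_k=4` over four documents returns the whole corpus for every query, and only the ranking differs.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (a stand-in for a dense embedding model)."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    # Counter returns 0 for missing words, so absent terms contribute nothing.
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k(query: str, corpus: List[str], k: int) -> List[Tuple[float, str]]:
    """Rank every document by similarity to the query and keep the best k."""
    q = embed(query)
    scored = [(cosine(q, embed(doc)), doc) for doc in corpus]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:k]


corpus = [
    "Reliable RAG systems separate retrieval, synthesis, and verification. Common failures include hallucination and shallow retrieval.",
    "RAG evaluation focuses on faithfulness, answer relevancy, and retrieval quality.",
    "Tool-using agents require constrained tools, validation, and self-review loops.",
    "A robust workflow follows retrieve, answer, evaluate, and revise steps.",
]
hits = top_k("How does RAG evaluation measure faithfulness?", corpus, k=2)
for score, doc in hits:
    print(f"{score:.2f}  {doc}")
```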

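```python
from llama_index.core.evaluation import FaithfulnessEvaluator, RelevancyEvaluator

# LLM-as-judge evaluators that reuse the configured OpenAI model.
faith_eval = FaithfulnessEvaluator(llm=Settings.llm)
rel_eval = RelevancyEvaluator(llm=Settings.llm)

def retrieve_evidence(q: str) -> str:
    """Retrieve numbered evidence snippets relevant to the question q."""
    r = query_engine.query(q)
    out = []
    for i, n in enumerate(r.source_nodes or []):
        # Truncate each snippet to 300 characters to keep the agent's context short.
        out.append(f"[{i+1}] {n.node.get_content()[:300]}")
    return "\n".join(out)

def score_answer(q: str, a: str) -> str:
    """Score answer a for question q on faithfulness and relevancy to the retrieved context."""
    # query() also synthesizes an answer (an extra LLM call); only its source nodes are used.
    resp = query_engine.query(q)
    ctx = [n.node.get_content() for n in resp.source_nodes or []]
    faith = faith_eval.evaluate(query=q, response=a, contexts=ctx)
    rel = rel_eval.evaluate(query=q, response=a, contexts=ctx)
    return f"Faithfulness: {faith.score}\nRelevancy: {rel.score}"
```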

We define the core tools used by the agent: evidence retrieval and answer evaluation. We implement automatic scoring for faithfulness and relevancy so the agent can judge the quality of its own responses.
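FaithfulnessEvaluator and RelevancyEvaluator are LLM-as-judge evaluators: they prompt the model to decide whether the answer is supported by the retrieved contexts (faithfulness) and whether the answer and contexts address the query (relevancy). For quick offline checks, such as unit tests that should not call the API, a cheap lexical proxy can flag obviously unsupported sentences. The sketch below is a hypothetical heuristic, not the LlamaIndex algorithm; the stopword list and the 0.6 overlap threshold are arbitrary assumptions.

```python
import re
from typing import Iterable, Set

# Small, arbitrary stopword list (an assumption; tune for your domain).
STOPWORDS = {"a", "an", "and", "the", "of", "to", "is", "are", "on", "in", "for", "with"}


def content_words(text: str) -> Set[str]:
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def lexical_faithfulness(answer: str, contexts: Iterable[str], min_overlap: float = 0.6) -> float:
    """Fraction of answer sentences whose content words mostly appear in the contexts."""
    ctx_words: Set[str] = set()
    for c in contexts:
        ctx_words |= content_words(c)
    # Split after sentence-ending punctuation followed by whitespace.
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    if not sentences:
        return 0.0
    supported = 0
    for s in sentences:
        words = content_words(s)
        if words and len(words & ctx_words) / len(words) >= min_overlap:
            supported += 1
    return supported / len(sentences)


sample_contexts = ["RAG evaluation focuses on faithfulness, answer relevancy, and retrieval quality."]
# One grounded sentence and one unsupported sentence.
print(lexical_faithfulness(
    "RAG evaluation focuses on faithfulness. Quantum chips cure hallucination.",
    sample_contexts,
))
```

A proxy like this catches only blatant drift; paraphrased but faithful answers can score low, which is why the tutorial relies on the LLM judges for the real scores.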

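```python
from llama_index.core.agent.workflow import ReActAgent
from llama_index.core.workflow import Context

# Plain Python functions are wrapped as tools; their docstrings become tool descriptions.
agent = ReActAgent(
    tools=[retrieve_evidence, score_answer],
    llm=Settings.llm,
    system_prompt="""
Always retrieve evidence first.
Produce a structured answer.
Evaluate the answer and revise once if scores are low.
""",
    verbose=True,
)

# The Context holds the agent's state (such as chat memory) across runs.
ctx = Context(agent)
```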

We create the ReAct-based agent and define its system behavior, guiding how it retrieves evidence, generates answers, and revises results. We also initialize the execution context that maintains the agent's state across interactions. This step brings the tools and the reasoning loop together into a single agentic workflow.
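Under the hood, a ReAct agent alternates between choosing an action (a tool call), observing the tool's output, and deciding whether to continue or finish. In LlamaIndex the LLM emits that decision as text that the agent parses; the sketch below replaces the LLM with a hypothetical scripted policy so the control flow runs offline. `react_loop`, `scripted_policy`, and the canned tool outputs are illustrative assumptions. The `max_steps` cap shows one way to bound the loop in code rather than relying on the prompt alone.

```python
from typing import Callable, Dict, List, Tuple

Action = Tuple[str, str]  # (tool name or "finish", input text)


def react_loop(
    policy: Callable[[List[str]], Action],
    tools: Dict[str, Callable[[str], str]],
    max_steps: int = 5,
) -> Tuple[str, List[str]]:
    """Alternate action -> observation until the policy finishes or max_steps is hit."""
    trace: List[str] = []
    for _ in range(max_steps):
        name, arg = policy(trace)
        if name == "finish":
            return arg, trace
        trace.append(f"Action: {name}({arg!r})")
        tool = tools.get(name)
        observation = tool(arg) if tool else f"unknown tool {name!r}"
        trace.append(f"Observation: {observation}")
    return "stopped: step limit reached", trace


def scripted_policy(trace: List[str]) -> Action:
    """Stand-in for the LLM: retrieve first, then score, then finish."""
    calls = sum(line.startswith("Action:") for line in trace)
    if calls == 0:
        return ("retrieve_evidence", "reliable RAG workflow")
    if calls == 1:
        return ("score_answer", "Retrieve, answer, evaluate, revise.")
    return ("finish", "Retrieve, answer, evaluate, revise.")


# Canned tool outputs (hypothetical) so the loop runs without an index or API key.
toy_tools = {
    "retrieve_evidence": lambda q: "[1] A robust workflow follows retrieve, answer, evaluate, and revise steps.",
    "score_answer": lambda a: "Faithfulness: 1.0 | Relevancy: 1.0",
}
final_answer, trace = react_loop(scripted_policy, toy_tools)
print("\n".join(trace))
print("Final:", final_answer)
```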

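```python
async def run_brief(topic: str) -> str:
    q = f"Design a reliable RAG + tool-using agent workflow and how to evaluate it. Topic: {topic}"
    handler = agent.run(q, ctx=ctx)
    # Print token deltas as they stream; events without a delta print nothing.
    async for ev in handler.stream_events():
        print(getattr(ev, "delta", ""), end="")
    # Awaiting the handler returns the agent's final response.
    res = await handler
    return str(res)

topic = "RAG agent reliability and evaluation"

# nest_asyncio (applied earlier) lets run_until_complete work inside the notebook's running loop.
loop = asyncio.get_event_loop()
result = loop.run_until_complete(run_brief(topic))

print("\n\nFINAL OUTPUT\n")
print(result)
```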

We execute the full agent loop by passing a topic into the system and streaming the agent’s reasoning and output. We allow the agent to complete its retrieval, generation, and evaluation cycle asynchronously.
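The streaming pattern in run_brief, iterating over handler.stream_events() and then awaiting the handler for the final result, can be reproduced with plain asyncio. The sketch below is a hypothetical toy: `ToyHandler`, `TokenEvent`, and `ToolEvent` are not LlamaIndex classes. It yields one event without a `delta` attribute to show why the tutorial uses `getattr(ev, "delta", "")`.

```python
import asyncio
from dataclasses import dataclass
from typing import AsyncIterator, List, Tuple, Union


@dataclass
class TokenEvent:
    delta: str  # a streamed text fragment


@dataclass
class ToolEvent:
    tool_name: str  # no `delta` attribute, like non-token events


class ToyHandler:
    """Streams events, then resolves to the full text once the stream is consumed."""

    def __init__(self, tokens: List[str]) -> None:
        self._tokens = tokens
        # Must be constructed inside a running event loop.
        self._result = asyncio.get_running_loop().create_future()

    async def stream_events(self) -> AsyncIterator[Union[TokenEvent, ToolEvent]]:
        yield ToolEvent("retrieve_evidence")
        for tok in self._tokens:
            await asyncio.sleep(0)  # hand control back to the loop, as real I/O would
            yield TokenEvent(tok)
        self._result.set_result("".join(self._tokens))

    def __await__(self):
        # Unlike a production handler, this toy resolves only after streaming finishes.
        return self._result.__await__()


async def consume(tokens: List[str]) -> Tuple[str, str]:
    handler = ToyHandler(tokens)
    streamed = []
    async for ev in handler.stream_events():
        streamed.append(getattr(ev, "delta", ""))  # "" for events without a delta
    final = await handler
    return "".join(streamed), final


streamed_text, final_text = asyncio.run(consume(["Retrieve", " first,", " then answer."]))
print(final_text)
```

In a standalone script with no running loop, `asyncio.run(run_brief(topic))` is the simpler entry point. In Jupyter a loop is already running, which is why the tutorial applies nest_asyncio before calling run_until_complete.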

In conclusion, we showed how an agent can retrieve supporting evidence, generate a structured response, and, as directed by its system prompt, score that response for faithfulness and relevancy before finalizing it. We kept the design modular and transparent, making it easy to extend the workflow with additional tools, evaluators, or domain-specific knowledge sources. This approach illustrates how LlamaIndex and OpenAI models can be combined into agentic systems that are not only more capable but also more reliable, because they check their answers against retrieved evidence instead of responding unverified.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.