Apple Researchers Unveil DeepPCR: A Novel Machine Learning Algorithm that Parallelizes Typically Sequential Operations in Order to Speed Up Inference and Training of Neural Networks

Advances in artificial intelligence and deep learning have made many new innovations possible. Neural networks now successfully handle complex tasks such as text and image synthesis, segmentation, and classification. However, because of its computational demands, neural network training can take days or weeks to produce adequate results. Inference with pre-trained models can also be slow, particularly for complex architectures.

Parallelization techniques speed up training and inference in deep neural networks. Even though these methods are widely used, some operations in neural networks are still performed sequentially. Diffusion models, for example, generate outputs through a succession of denoising steps, and forward and backward passes proceed layer by layer. As the number of steps grows, executing these processes sequentially becomes computationally expensive and can turn into a bottleneck.

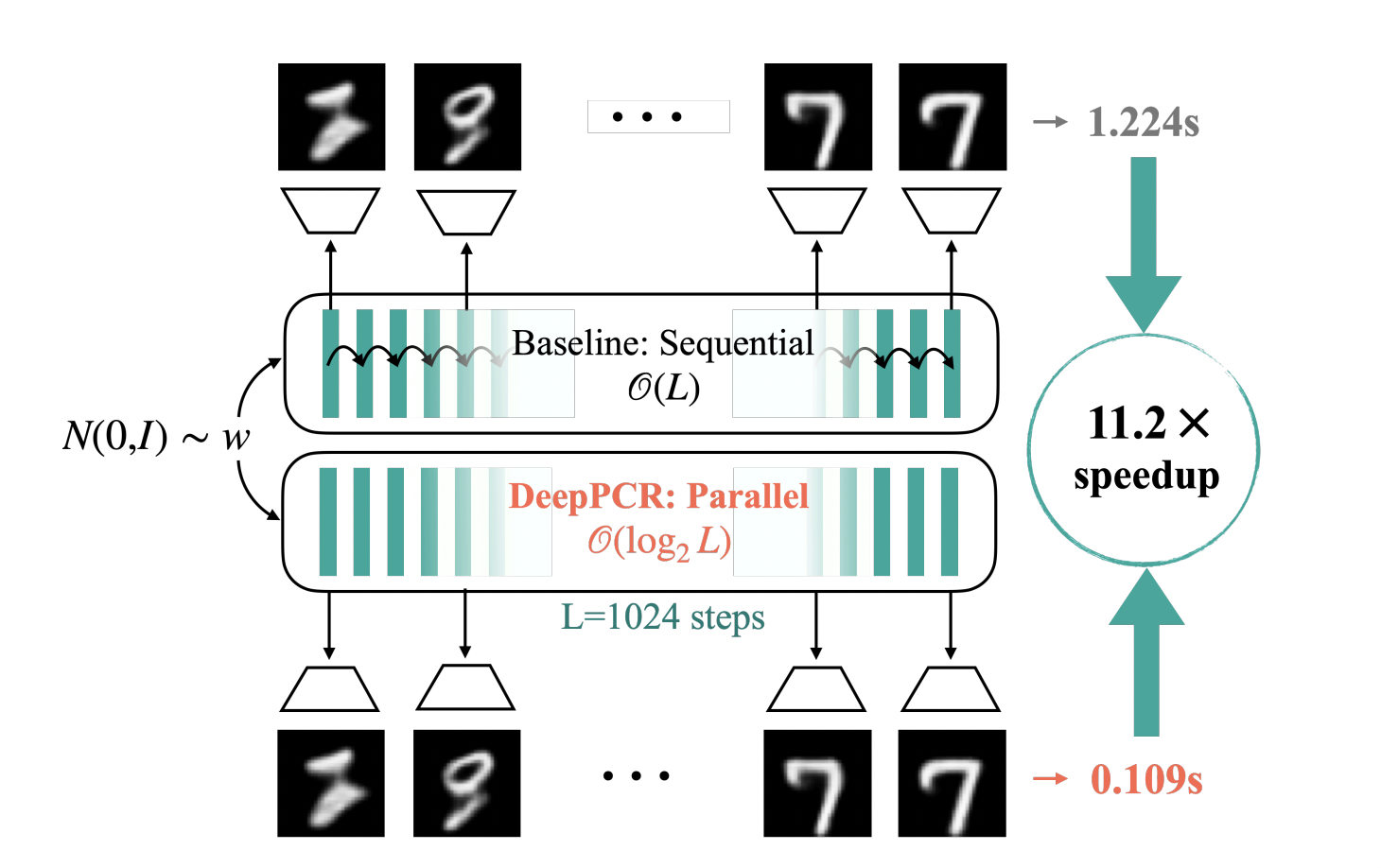

To address this issue, a team of researchers from Apple has introduced DeepPCR, an algorithm that aims to speed up neural network training and inference. DeepPCR interprets a sequence of L steps as the solution of a specific system of equations, and the team recovers this solution with the Parallel Cyclic Reduction (PCR) algorithm. The primary advantage of DeepPCR is that it reduces the computational complexity of these sequential processes from O(L) to O(log₂ L). This lower complexity translates into faster execution, especially for large values of L.
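To make the O(L) versus O(log₂ L) contrast concrete, here is a minimal sketch of the recursive-doubling idea behind cyclic reduction, applied to the simplest possible case: a scalar first-order linear recurrence x[l] = a[l] · x[l-1] + b[l], which corresponds to a lower-bidiagonal system of equations. This is an illustrative assumption, not Apple's implementation; DeepPCR works on vector-valued states, and the function names below are hypothetical. Each round combines every equation with the one `stride` positions earlier. All updates within a round are independent, so on parallel hardware a round costs one step, and ⌈log₂ L⌉ rounds suffice.

```python
def sequential_recurrence(a, b, x0):
    """Reference: evaluate x[l] = a[l] * x[l-1] + b[l] one step at a time (L dependent steps)."""
    xs, x = [], x0
    for al, bl in zip(a, b):
        x = al * x + bl
        xs.append(x)
    return xs


def pcr_linear_recurrence(a, b, x0):
    """Hypothetical helper: solve the same recurrence by recursive doubling.

    Invariant: before a round with offset `stride`, equation l reads
    x[l] = A[l] * x[l - stride] + B[l] for l >= stride, while equations
    with l < stride already read x[l] = A[l] * x0 + B[l].
    Substituting the equation for x[l - stride] doubles the reach, so
    ceil(log2 L) rounds leave every equation depending on x0 alone.
    """
    L = len(a)
    A, B = list(a), list(b)
    stride, rounds = 1, 0
    while stride < L:
        newA, newB = A[:], B[:]
        # Every l here reads only the previous round's A and B, so the
        # iterations are independent: one parallel step on a GPU.
        for l in range(stride, L):
            newA[l] = A[l] * A[l - stride]
            newB[l] = A[l] * B[l - stride] + B[l]
        A, B = newA, newB
        stride *= 2
        rounds += 1
    return [A[l] * x0 + B[l] for l in range(L)], rounds


xs, rounds = pcr_linear_recurrence([2, 3, 1, 2], [1, 1, 1, 1], x0=1)
print(xs, rounds)  # [3, 10, 11, 23] 2
```

The Python loop runs serially for readability; the inner `for` loop is the part a GPU would execute in parallel. For L = 4, the sketch needs two rounds instead of four sequential steps, and the result matches `sequential_recurrence` exactly.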

The team conducted experiments to verify the theoretical claims about DeepPCR's reduced complexity and to determine the conditions under which it yields a speedup. By applying DeepPCR to parallelize the forward and backward passes of multi-layer perceptrons, they achieved speedups of up to 30× for the forward pass and 200× for the backward pass.

The team also demonstrated DeepPCR's versatility by using it to train ResNets with 1024 layers, where training was up to 7× faster. When they applied the technique to the generation phase of diffusion models, it produced outputs 11× faster than the sequential approach.
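As a rough illustration of why gains grow with depth, assume that each of the L = 1024 layers is one sequential step and that each PCR round costs one parallel step. These are simplifying assumptions made here, not taken from the paper. The idealized reduction in the number of dependent steps is then:

```latex
\frac{L}{\log_2 L} = \frac{1024}{\log_2 1024} = \frac{1024}{10} = 102.4
```

This count ignores the extra work performed in each round, hardware parallelism limits, and any additional iterations the method may need. It should therefore be read as intuition for why longer sequences benefit more, not as a prediction of the measured 7× training speedup.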

The team has summarized its primary contributions as follows.

- DeepPCR, a new approach for parallelizing sequential processes in neural network training and inference, has been introduced. Its key feature is the ability to lower the computational complexity from O(L) to O(log₂ L), where L is the sequence length.

- DeepPCR has been used to parallelize the forward and backward passes in multi-layer perceptrons (MLPs). The method's performance has been analyzed extensively to identify its high-performance regimes, taking basic design parameters into account. The study also investigates the trade-offs between speed, accuracy of the solution, and memory usage.

- DeepPCR has been used to speed up the training of deep ResNets on MNIST and generation in diffusion models trained on MNIST, CIFAR-10, and CelebA. The results show that DeepPCR delivers significant speedups, up to 7× faster ResNet training and 11× faster diffusion-model generation, while still producing results comparable to those of sequential techniques.
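The trade-off between speed and solution accuracy arises because most network steps are nonlinear, so the system of equations cannot be solved in a single linear pass. A common way to handle this, and as we understand it the route the DeepPCR paper takes, is Newton's method. Each iteration linearizes z[i] = f(z[i-1]) into a bidiagonal linear system, and that system can itself be solved by cyclic reduction. The sketch below assumes a scalar state, a toy step f(z) = tanh(0.5 z + 0.1) standing in for a layer, and hypothetical helper names (`pcr_solve`, `newton_pcr`). The tolerance `tol` is the knob that trades accuracy against the number of iterations.

```python
import math


def pcr_solve(a, b, x0):
    """Hypothetical helper: solve x[l] = a[l] * x[l-1] + b[l], x[-1] = x0, in ceil(log2 L) rounds."""
    L = len(a)
    A, B = list(a), list(b)
    stride = 1
    while stride < L:
        # Both comprehensions read the previous round's A and B before reassignment.
        A, B = (
            [A[l] * A[l - stride] if l >= stride else A[l] for l in range(L)],
            [A[l] * B[l - stride] + B[l] if l >= stride else B[l] for l in range(L)],
        )
        stride *= 2
    return [A[l] * x0 + B[l] for l in range(L)]


def newton_pcr(f, df, z0, L, tol=1e-10, max_iters=100):
    """Hypothetical helper: solve z[i] = f(z[i-1]) for i = 0..L-1 jointly, with z[-1] = z0.

    Each Newton iteration linearizes the residuals r[i] = z[i] - f(z[i-1])
    into the bidiagonal system dz[i] = f'(z[i-1]) * dz[i-1] - r[i],
    which pcr_solve handles in logarithmically many rounds.
    Returns the states and the number of Newton updates performed.
    """
    z = [0.0] * L  # initial guess; z0 itself is fixed and never updated
    for it in range(max_iters):
        prev = [z0] + z[:-1]  # prev[i] is the input to step i
        r = [z[i] - f(prev[i]) for i in range(L)]
        if max(abs(v) for v in r) < tol:  # looser tol: fewer iterations, less accuracy
            return z, it
        dz = pcr_solve([df(p) for p in prev], [-v for v in r], 0.0)
        z = [zi + dzi for zi, dzi in zip(z, dz)]
    return z, max_iters


def layer(z):
    # Toy stand-in for one network step (an assumption for illustration).
    return math.tanh(0.5 * z + 0.1)


def dlayer(z):
    # Derivative of layer(z) with respect to z.
    return 0.5 * (1.0 - math.tanh(0.5 * z + 0.1) ** 2)


def sequential_states(f, z0, L):
    # Baseline: apply f one step at a time.
    out, z = [], z0
    for _ in range(L):
        z = f(z)
        out.append(z)
    return out


states, iters = newton_pcr(layer, dlayer, z0=1.0, L=64)
err = max(abs(p - q) for p, q in zip(states, sequential_states(layer, 1.0, 64)))
print(f"Newton iterations: {iters}, max deviation from sequential: {err:.1e}")
```

The loops here still run serially in Python, so the sketch only shows the structure. Each Newton iteration is one solve of logarithmic depth, so the total parallel depth depends on how many iterations the chosen tolerance requires. Because the system is lower-triangular, each Newton update fixes at least one more leading state exactly. In exact arithmetic, the iteration therefore never needs more than L updates.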


Tanya Malhotra is a final-year undergraduate at the University of Petroleum & Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning. She is a data science enthusiast with strong analytical and critical-thinking skills, along with an ardent interest in acquiring new skills, leading groups, and managing work in an organized manner.