Can AI Really Understand Sarcasm? This Paper from NYU Explores Advanced Models in Natural Language Processing

Natural Language Processing (NLP) is useful in many fields, transforming communication, information processing, and decision-making. It is also widely used for sarcasm detection. However, sarcasm detection is challenging because of the intricate relationship between a speaker’s true feelings and their stated words. Sarcasm is also contextual, so identifying it calls for examining the speaker’s tone and intention. Irony and sarcasm are common in online posts, particularly in reviews and comments, and they can give a misleading picture of the sentiments actually being communicated.

Consequently, a recent study by a researcher at New York University examined the performance of two LLMs specifically trained for sarcasm detection. The study emphasizes that correctly identifying sarcasm is necessary to understand opinions. Earlier models focused on analyzing language in isolation. Because sarcasm is contextual, however, approaches such as Support Vector Machines (SVM) and Long Short-Term Memory (LSTM) networks gained prominence.

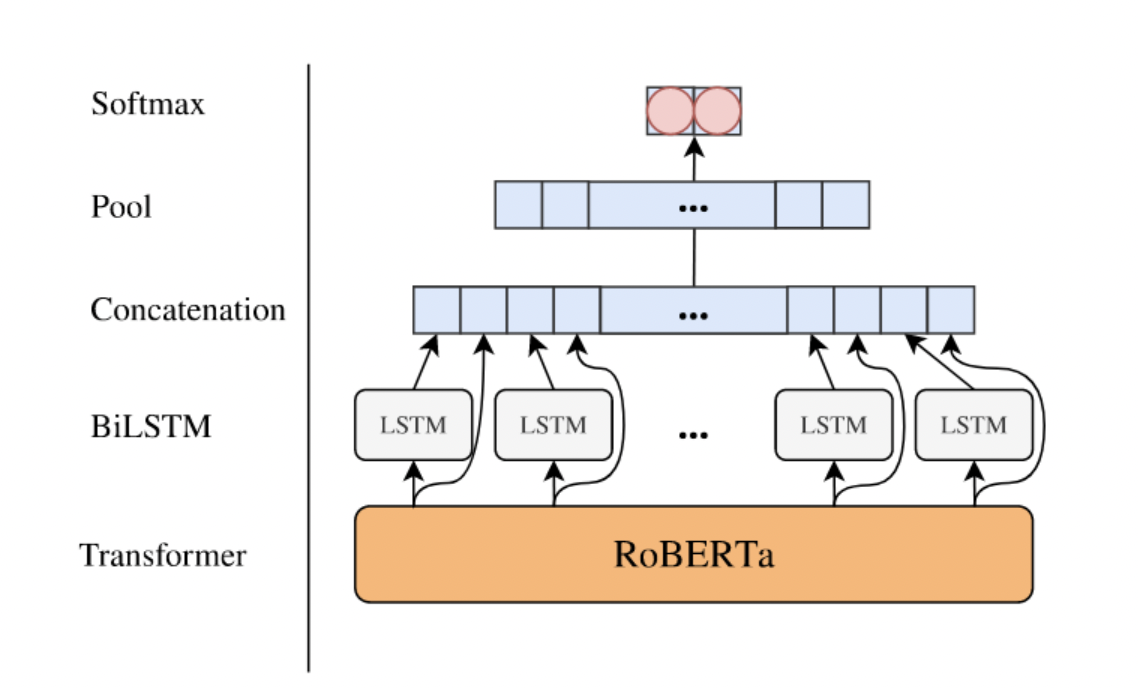

The researcher studied this field by analyzing texts from social media platforms to gauge public sentiment. This is particularly important because online reviews and comments often employ sarcasm, which can mislead models into misclassifying them based on their surface emotional tone. To tackle these issues, researchers have started creating sarcasm detection models, two of the most significant being CASCADE and RCNN-RoBERTa. The study evaluated how well these two models identify sarcasm in Reddit posts.

The evaluation covers two approaches: a context-based approach (CASCADE) that considers user personality, stylometric, and discourse features, and a deep learning approach (RCNN-RoBERTa) that uses the RoBERTa model. The study found that adding contextual information, such as user personality embeddings, significantly improves performance compared to traditional methods.
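To make the idea of adding contextual information more concrete, here is a minimal sketch of one simple way to combine text features with user and discourse features: concatenate them into a single vector and pass it to a classifier. This is an illustration under stated assumptions, not the implementation evaluated in the study. The function names, the toy vectors, the weights, and the logistic scoring step are all hypothetical.

```python
import math
from typing import List, Sequence


def concat_features(text_vec: Sequence[float],
                    user_vec: Sequence[float],
                    discourse_vec: Sequence[float]) -> List[float]:
    """Join text, user, and discourse features into one vector (assumed fusion step)."""
    return list(text_vec) + list(user_vec) + list(discourse_vec)


def sarcasm_score(features: Sequence[float],
                  weights: Sequence[float],
                  bias: float = 0.0) -> float:
    """Logistic score in (0, 1); a stand-in for a trained classifier head."""
    if len(features) != len(weights):
        raise ValueError("features and weights must have the same length")
    z = sum(f * w for f, w in zip(features, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))


# Hypothetical toy values; real systems would use learned embeddings.
text_vec = [0.2, -0.1]    # features extracted from the comment text
user_vec = [0.9]          # user personality / stylometric embedding
discourse_vec = [0.4]     # discourse (e.g. thread or forum) features

features = concat_features(text_vec, user_vec, discourse_vec)
weights = [0.5, 0.5, 1.0, 0.0]  # made-up weights, not trained values
score = sarcasm_score(features, weights)
```

In this toy setup, the user embedding contributes to the score alongside the text features, which is the intuition behind the reported gain from contextual information. A real model would learn both the embeddings and the weights from labeled data.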

The researcher also emphasized the efficacy of contextual and transformer-based methods, suggesting that incorporating supplementary contextual features into transformers may be a viable direction for future research. The researcher said that these results may contribute to advancing LLMs skilled in identifying sarcasm in human discourse. The capacity to recognize sarcasm supports accurate comprehension of user-generated content and provides a more nuanced view of the emotions expressed in reviews and posts.

In conclusion, the study is a significant step toward effective sarcasm detection in NLP. By combining contextual information with advanced models, researchers are moving closer to language models that analyze human expression online more accurately, including recognizing sarcasm. Such enhanced models would benefit businesses seeking rapid sentiment analysis of customer feedback, social media interactions, and other user-generated material.

Check out the Paper. All credit for this research goes to the researchers of this project.

Rachit Ranjan is a consulting intern at MarktechPost. He is currently pursuing his B.Tech at the Indian Institute of Technology (IIT) Patna. He is actively shaping his career in Artificial Intelligence and Data Science and is passionate about and dedicated to exploring these fields.