AI-Assisted Causal Inference: Using LLMs to Revolutionize Instrumental Variable Selection

Endogeneity presents a significant challenge in conducting causal inference in observational settings. Researchers in social sciences, statistics, and related fields have developed various identification strategies to overcome this obstacle by recreating natural experiment conditions. The instrumental variables (IV) method has emerged as a leading approach, with researchers discovering IVs in diverse settings and justifying their adherence to exclusion restrictions. However, these exclusion restrictions are fundamentally untestable assumptions, often relying on rhetorical arguments specific to each context. The process of identifying potential IVs demands researchers’ counterfactual reasoning, creativity, and sometimes luck, contributing to the heuristic nature of human-led research. This subjective and non-statistical approach to IV selection and justification highlights the need for more rigorous and systematic methods in causal inference.

Large Language Models (LLMs) have emerged as a promising tool for discovering new IVs in causal inference research. A researcher from the University of Bristol shows that these AI systems, with their advanced language processing capabilities, can assist in searching for valid IVs and provide rhetorical justifications, much as human researchers do but far faster. LLMs can explore a vast search space, conduct systematic hypothesis searches, and engage in counterfactual reasoning, making them well-suited for causal inference tasks. This AI-assisted approach offers several benefits: it enables rapid, systematic searches adaptable to specific research settings, increases the likelihood of obtaining multiple IVs for formal validity testing, and improves the chances of finding relevant data that contain IVs or of guiding the construction of such data. The proposed method involves carefully constructing prompts that guide LLMs in searching for valid IV candidates, incorporating verbal translations of exclusion restrictions and employing role-playing techniques to mimic agents’ decision-making processes.

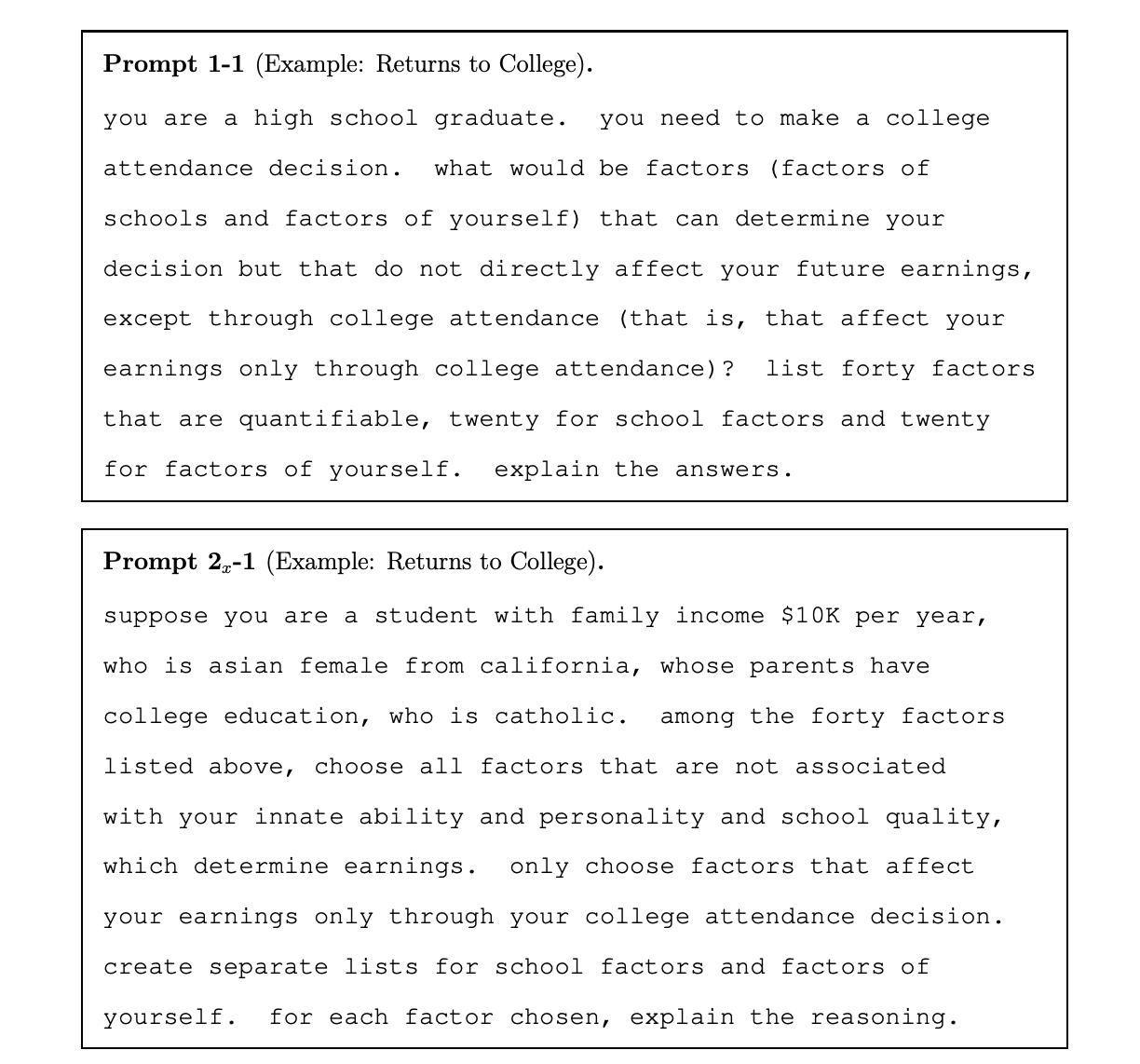

The study applies OpenAI’s ChatGPT-4 (GPT4) to discover IVs in three well-known examples from empirical economics: returns to schooling, production functions, and peer effects. In these examples, the prompts produced lists of candidate IVs, including both novel suggestions and variables already popular in the literature, along with rationales for their validity. The concept extends beyond IV discovery to other causal inference methods, such as searching for control variables in regression and difference-in-differences methods and identifying running variables in regression discontinuity designs. While the generated lists are not definitive, they serve as useful benchmarks that point researchers toward potential variables and domains to explore. The dialogue with GPT4 can also help researchers refine their arguments for variable validity, underscoring the collaborative potential between human researchers and AI in causal inference.

IV discovery with the LLM proceeds in two steps. In Step 1, the LLM is prompted to search for IVs that satisfy the verbal descriptions of exclusion restriction (i) and the relevance condition. Step 2 refines the search by selecting, from the Step 1 candidates, the IVs that also meet the verbal description of exclusion restriction (ii). Both steps involve counterfactual statements and require the LLM to provide rationales for its responses. This two-step approach offers several advantages: it improves LLM performance by breaking a complex task into simpler ones, allows the user to inspect intermediate outputs, and yields useful insights from those intermediate results. The prompts are initially constructed without covariates for simplicity; more realistic prompts that incorporate covariates are introduced later. The result is a flexible framework for IV discovery that can be fine-tuned and adapted to specific research contexts while remaining systematic.
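The two-step flow described above can be sketched in Python. This is a minimal illustration, not the prompts used in the paper: the prompt wording, the function names, and the "- name: rationale" reply format are all assumptions, and `ask` stands for any user-supplied function that sends a prompt to an LLM and returns its text reply.

```python
from typing import Callable, Dict, List


def build_step1_prompt(agent: str, treatment: str, outcome: str, n: int = 5) -> str:
    # Hypothetical wording standing in for the verbal descriptions of
    # exclusion restriction (i) and the relevance condition.
    return (
        f"You are {agent}. List up to {n} factors that would change your "
        f"decision about {treatment} but would not directly affect "
        f"{outcome} except through {treatment}. "
        "Answer with one line per factor, formatted as '- name: rationale'."
    )


def build_step2_prompt(agent: str, outcome: str, candidates: List[str]) -> str:
    # Hypothetical wording standing in for exclusion restriction (ii).
    listing = "\n".join(f"- {c}" for c in candidates)
    return (
        f"You are {agent}. Keep only the factors below that are unrelated to "
        f"your other personal characteristics that influence {outcome}. "
        "Answer with one line per kept factor, formatted as '- name: rationale'.\n"
        f"{listing}"
    )


def parse_candidates(reply: str) -> List[str]:
    # Extract the candidate name from each '- name: rationale' line.
    names = []
    for line in reply.splitlines():
        line = line.strip()
        if line.startswith("-"):
            names.append(line[1:].split(":", 1)[0].strip())
    return names


def run_two_step(ask: Callable[[str], str], agent: str,
                 treatment: str, outcome: str) -> Dict[str, List[str]]:
    # Step 1: broad search; the intermediate list is kept for inspection.
    step1 = parse_candidates(ask(build_step1_prompt(agent, treatment, outcome)))
    # Step 2: refine, accepting only names that were proposed in Step 1.
    step2 = parse_candidates(ask(build_step2_prompt(agent, outcome, step1)))
    return {"step1": step1, "step2": [c for c in step2 if c in step1]}
```

Returning both lists keeps the Step 1 output available for inspection, which is one of the advantages the two-step design is meant to provide.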

This research serves as a foundation for integrating LLMs into instrumental variable discovery in causal inference. Possible refinements include supplying known IVs from the literature to guide LLMs toward new ones, potentially through few-shot learning. Methods for aggregating results across multiple LLM sessions could also account for, and exploit, the inherent randomness in LLM outputs. These advancements could lead to more robust and comprehensive IV discovery processes. As AI continues to evolve, collaboration between human researchers and AI systems promises more efficient and insightful empirical research in economics and related fields.
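One simple way to aggregate results across sessions, offered here only as an illustrative assumption and not as a method from the paper, is to count how often each candidate IV appears and keep those proposed in at least a chosen share of sessions. The function name and the `min_share` threshold are hypothetical.

```python
from collections import Counter
from typing import List, Tuple


def aggregate_sessions(sessions: List[List[str]],
                       min_share: float = 0.5) -> List[Tuple[str, float]]:
    """Rank candidate IVs by the share of sessions that proposed them."""
    if not sessions:
        return []
    counts: Counter = Counter()
    for candidates in sessions:
        # Normalize case and whitespace; count each name once per session.
        counts.update({c.strip().lower() for c in candidates})
    total = len(sessions)
    ranked = [(name, count / total) for name, count in counts.items()
              if count / total >= min_share]
    # Highest share first; alphabetical order breaks ties deterministically.
    return sorted(ranked, key=lambda item: (-item[1], item[0]))
```

Exact string matching after normalization is a crude stand-in for recognizing that two sessions named the same variable; differently worded suggestions would need manual or semantic merging.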

Asjad is an intern consultant at Marktechpost. He is pursuing a B.Tech in mechanical engineering at the Indian Institute of Technology, Kharagpur. Asjad is a machine learning and deep learning enthusiast who is always researching the applications of machine learning in healthcare.