Deciphering Auditory Processing: How Deep Learning Models Mirror Human Speech Recognition in the Brain

Speech perception involves computations that convert acoustic signals into linguistic representations. When someone listens to speech, the entire auditory pathway is activated: the auditory nerve, subcortical structures, and the primary and nonprimary auditory cortical regions. Natural speech perception is a difficult task because environmental conditions vary and the acoustic signals that carry linguistic perceptual units are themselves variable. While classical cognitive models explain many psychological features of speech perception, they fall short in explaining neural coding in the brain and in recognizing natural speech. Meanwhile, deep learning models are approaching human performance in automatic speech recognition.

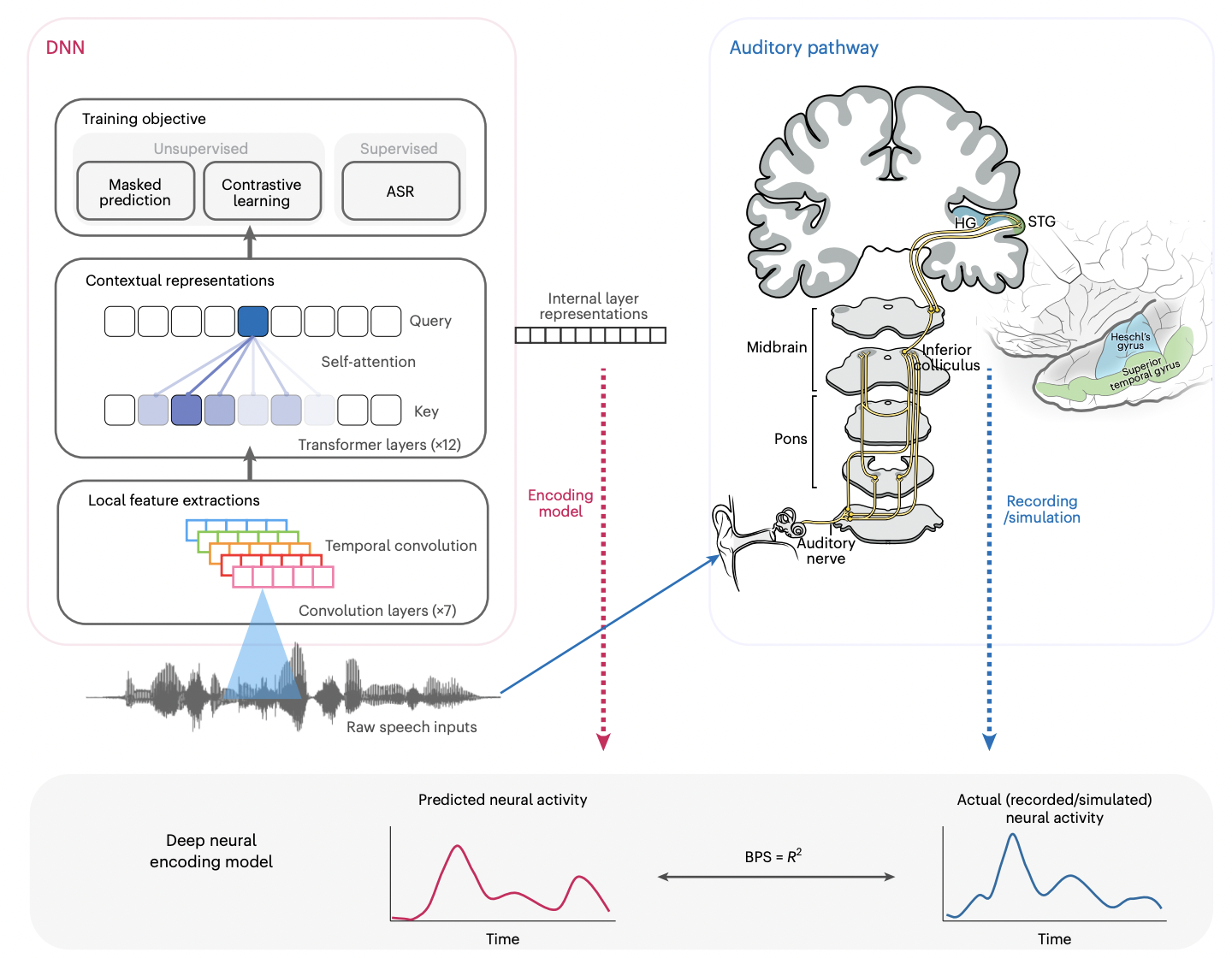

To improve the interpretability of AI models and to provide new data-driven computational models of sensory perception, researchers at the University of California, San Francisco, set out to correlate the computations and representations of deep learning models with neural responses in the human auditory system. The study aims to identify representations and computations shared by the human auditory circuit and state-of-the-art neural network models of speech. Using a neural encoding framework, the analysis focuses on how well Deep Neural Network (DNN) speech embeddings correlate with neural responses to natural speech along the ascending auditory pathway.
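A neural encoding framework of this kind can be sketched as a regression from model features to recorded responses, scored by how well it predicts held-out data. The example below is a minimal illustration only: the article does not say which regression method the researchers used, so ridge regression is an assumption, the data are synthetic stand-ins for DNN embeddings and a neural recording, and the function names `fit_ridge` and `encoding_score` are hypothetical.

```python
import numpy as np

def fit_ridge(X, y, alpha=1.0):
    """Closed-form ridge regression: w = (X^T X + alpha * I)^-1 X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_features), X.T @ y)

def encoding_score(X_train, y_train, X_test, y_test, alpha=1.0):
    """Fit on training data and return the Pearson correlation between
    the predicted and the actual held-out response."""
    # Standardize features using training statistics only.
    mu = X_train.mean(axis=0)
    sd = X_train.std(axis=0) + 1e-8
    X_train_z = (X_train - mu) / sd
    X_test_z = (X_test - mu) / sd
    # Center the response so no explicit intercept column is needed.
    y_mean = y_train.mean()
    w = fit_ridge(X_train_z, y_train - y_mean, alpha)
    y_pred = X_test_z @ w + y_mean
    return float(np.corrcoef(y_pred, y_test)[0, 1])

# Synthetic stand-ins (assumption): rows are time frames, columns are
# embedding dimensions; the "response" is a noisy linear readout.
rng = np.random.default_rng(0)
n_time, n_dim = 2000, 16
embeddings = rng.standard_normal((n_time, n_dim))
true_w = rng.standard_normal(n_dim)
response = embeddings @ true_w + 0.5 * rng.standard_normal(n_time)

split = 1500  # first 1500 frames for fitting, the rest held out
r = encoding_score(embeddings[:split], response[:split],
                   embeddings[split:], response[split:])
print(f"held-out prediction correlation: {r:.3f}")
```

Under this setup, a higher held-out correlation for one feature set than another would indicate that the first captures more of what drives the response, which is the kind of comparison the article describes.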

The researchers systematically compare the auditory circuit with DNN models that differ in computational architecture (convolution, recurrence, and self-attention) and training procedure (supervised and unsupervised objectives). Examining the DNN computations also sheds light on the processes that underlie the neural encoding predictions. Unlike earlier modeling efforts that concentrated on a single language, mostly English, the study uses a cross-linguistic paradigm to reveal language-specific and language-invariant features of speech perception.

The researchers show that speech representations learned in state-of-the-art DNNs closely resemble key information-processing elements of the human auditory system. When predicting neural responses to natural speech throughout the auditory pathway, DNN feature representations perform noticeably better than theory-driven acoustic-phonetic feature sets. The researchers also examined the contextual computations inside the DNNs and found that the networks acquire crucial language-related temporal structures, such as phoneme and syllable contexts, through entirely unsupervised training on natural speech. This capacity to acquire language-specific linguistic information predicts the correlation between DNN and neural coding in the nonprimary auditory cortex. Deep learning-based neural encoding models can also reveal language-specific coding in the superior temporal gyrus (STG) during cross-language perception, whereas linear spectrotemporal receptive field (STRF) models cannot.
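For readers unfamiliar with the linear STRF baseline mentioned here, it can be thought of as a linear filter that maps the recent history of a spectrogram to the neural response at each time point. The sketch below is an illustration under assumptions, not the study's implementation: the spectrogram and response are synthetic, the ridge penalty is an arbitrary choice, and the helper names `lagged_design` and `fit_strf` are hypothetical.

```python
import numpy as np

def lagged_design(spec, n_lags):
    """Build a design matrix whose row t holds spec[t], spec[t-1], ...,
    spec[t-n_lags+1] side by side (zero-padded at the start)."""
    n_time, n_freq = spec.shape
    X = np.zeros((n_time, n_lags * n_freq))
    for lag in range(n_lags):
        # Columns for this lag receive the spectrogram delayed by `lag` frames.
        X[lag:, lag * n_freq:(lag + 1) * n_freq] = spec[:n_time - lag]
    return X

def fit_strf(spec, response, n_lags, alpha=1.0):
    """Estimate a (n_lags x n_freq) STRF with ridge regression."""
    X = lagged_design(spec, n_lags)
    w = np.linalg.solve(X.T @ X + alpha * np.eye(X.shape[1]), X.T @ response)
    return w.reshape(n_lags, spec.shape[1])

# Synthetic stand-ins (assumption): a random "spectrogram" and a response
# generated by a known filter, so the estimate can be checked against it.
rng = np.random.default_rng(1)
n_time, n_freq, n_lags = 3000, 8, 5
spec = rng.standard_normal((n_time, n_freq))
true_strf = rng.standard_normal((n_lags, n_freq))
response = (lagged_design(spec, n_lags) @ true_strf.ravel()
            + 0.1 * rng.standard_normal(n_time))

est = fit_strf(spec, response, n_lags)
strf_error = float(np.max(np.abs(est - true_strf)))
print(f"largest absolute error in recovered STRF weights: {strf_error:.4f}")
```

Because the model is a single linear filter over the spectrogram, it has no way to represent the learned, context-dependent features that a DNN layer can supply, which is consistent with the limitation the article attributes to STRF models.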

To sum it up,

Using a comparative methodology, the researchers demonstrate significant representational and computational similarities between DNNs trained on speech and the human auditory system. From a neuroscientific point of view, data-driven computational models surpass classic feature-based encoding models at extracting intermediate speech features from statistical structure. From an AI standpoint, comparing DNN representations with neural responses and selectivity offers a way to understand the “black box” representations of DNNs. The results suggest that contemporary DNNs may have converged on representations that resemble how the human auditory system processes information. According to the researchers, future studies could investigate and validate these results using a wider range of AI models and larger, more varied populations.

Dhanshree Shenwai is a Computer Science Engineer with experience in FinTech companies covering the Financial, Cards & Payments, and Banking domains, and a keen interest in applications of AI. She is enthusiastic about exploring new technologies and advancements that make everyone’s life easier in today’s evolving world.