Liquid AI Introduces Liquid Foundation Models (LFMs): A 1B, 3B, and 40B Series of Generative AI Models

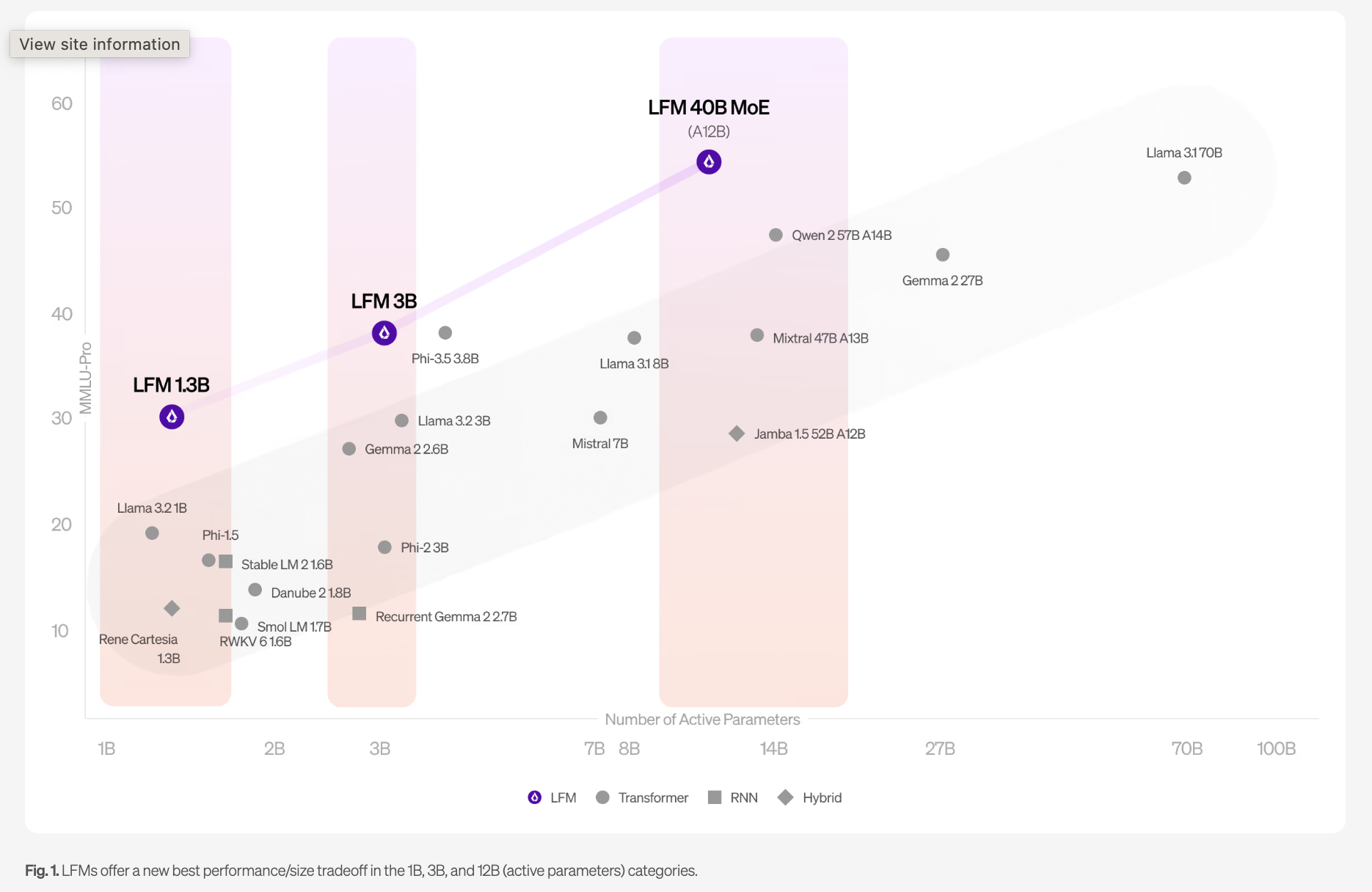

Liquid AI has released its first series of Liquid Foundation Models (LFMs), a new generation of generative AI models offered at three scales: 1B, 3B, and 40B parameters. The company positions the series as a new standard for performance and efficiency, claiming state-of-the-art results on various benchmarks at each scale while maintaining a smaller memory footprint and more efficient inference.

The first series of LFMs comprises three main models:

- LFM-1B: A 1 billion parameter model that offers cutting-edge performance for its size category. It has achieved the highest scores across various benchmarks in its class, surpassing many transformer-based models despite not being built on the widely used GPT architecture.

- LFM-3B: A 3 billion parameter model ideal for mobile and edge applications. It not only outperforms its direct competitors in terms of efficiency and speed but also positions itself as a worthy contender against models in higher parameter ranges, such as 7B and 13B models from previous generations.

- LFM-40B: A 40 billion parameter Mixture of Experts (MoE) model designed for more complex tasks. Its output quality is positioned against that of even larger models. The MoE architecture activates only selected model segments depending on the task, which reduces the computation needed for each input.
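To make the idea of selective activation concrete, here is a minimal sketch of generic top-k expert routing in plain Python. It is not Liquid AI's implementation, which has not been published in this level of detail. The function names (`moe_forward`, `softmax`), the dot-product gate, and the choice of `top_k=2` are all assumptions made for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, gate_weights, experts, top_k=2):
    """Route input vector x to the top_k experts and mix their outputs.

    gate_weights: one gate vector per expert (hypothetical learned parameters).
    experts: list of callables, each mapping a vector to a vector of the same length.
    """
    # Gate score per expert: dot product of x with that expert's gate vector.
    scores = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    # Keep only the top_k highest-scoring experts; the rest are never evaluated.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Renormalize the gate probabilities over the chosen experts.
    probs = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for p, i in zip(probs, chosen):
        y = experts[i](x)
        out = [o + p * yi for o, yi in zip(out, y)]
    return out, chosen

# Four toy experts that simply scale their input by a fixed factor.
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate_weights = [[0.0, 0.0], [1.0, 0.0], [2.0, 0.0], [-1.0, 0.0]]
output, used = moe_forward([1.0, 0.0], gate_weights, experts)
print(used)  # indices of the two experts that were actually run
```

Only the chosen experts are evaluated, so the compute per input scales with `top_k` rather than with the total number of experts. This is the general reason an MoE model can carry many parameters while keeping inference cost lower.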

Architectural Innovations and Design Principles

The LFMs are built from first principles, with a focus on designing powerful AI systems that offer robust control over their capabilities. According to Liquid AI, the models are constructed from computational units rooted in the theory of dynamical systems, signal processing, and numerical linear algebra. This blend allows LFMs to draw on theoretical advances across these fields to build general-purpose AI models that can handle any type of sequential data, including video, audio, text, and time series.

The design of LFMs emphasizes two primary aspects: featurization and footprint. Featurization is the process of converting input data into a structured set of features or vectors, which are then used to modulate computation inside the model adaptively. For instance, audio and time series data generally require less featurization in operators than language and multi-modal data because their information density is lower.
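One way to picture features that modulate computation is a linear layer whose output rows are scaled by gates computed from the input itself. The sketch below is an illustrative assumption, not the operator Liquid AI uses. The names `featurize` and `adaptive_linear`, the sigmoid gate, and the weight layout are all hypothetical.

```python
import math

def featurize(x, feat_weights):
    """Map input x to one gate in (0, 1) per output row (hypothetical featurizer)."""
    gates = []
    for row in feat_weights:
        z = sum(w * xi for w, xi in zip(row, x))
        gates.append(1.0 / (1.0 + math.exp(-z)))  # sigmoid
    return gates

def adaptive_linear(x, base_weights, feat_weights):
    """Compute y = diag(g(x)) @ W @ x, a linear map rescaled by input-derived gates."""
    gates = featurize(x, feat_weights)
    y = []
    for g, row in zip(gates, base_weights):
        y.append(g * sum(w * xi for w, xi in zip(row, x)))
    return y

# With all-zero featurizer weights every gate is 0.5, so the identity map is halved.
print(adaptive_linear([1.0, 2.0], [[1.0, 0.0], [0.0, 1.0]], [[0.0, 0.0], [0.0, 0.0]]))
```

In this toy version the effective weight matrix changes with every input, which is the sense in which the operator is adaptive. A smaller featurizer would correspond to less featurization for lower-density data such as audio.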

The LFM stack is being optimized for deployment on various hardware platforms, including NVIDIA, AMD, Qualcomm, Cerebras, and Apple. This optimization enables performance improvements across different deployment environments, from edge devices to large-scale cloud infrastructures.

Performance Benchmarks and Comparison

The initial benchmarks for the LFMs show impressive results compared to similar models. The 1B model, for instance, outperformed several transformer-based models on Massive Multitask Language Understanding (MMLU) and other benchmark metrics. Similarly, the 3B model’s performance has been likened to that of models in the 7B and 13B categories, making it highly suitable for resource-constrained environments.

The 40B MoE model, on the other hand, offers a new balance between model size and output quality. Its mixture-of-experts architecture allows higher throughput and deployment on more cost-effective hardware, and it achieves performance comparable to that of larger models through efficient use of that architecture.

Key Strengths and Use Cases

Liquid AI has highlighted several areas where LFMs demonstrate significant strengths, including general and expert knowledge, mathematics and logical reasoning, and efficient long-context tasks. The models also offer robust multilingual capabilities, supporting Spanish, French, German, Chinese, Arabic, Japanese, and Korean. However, LFMs are less effective at zero-shot code tasks and precise numerical calculations, a gap that is expected to be addressed in future iterations of the models.

LFMs have also been optimized to handle longer context lengths more effectively than traditional transformer models. For example, the models can process up to 32k tokens in context, which makes them particularly effective for document analysis and summarization tasks, more meaningful interactions with context-aware chatbots, and improved Retrieval-Augmented Generation (RAG) performance.
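For RAG and document analysis, a fixed context window means retrieved passages have to be fitted into a token budget. The following sketch greedily packs ranked passages into a 32k-token window. The `count_tokens` function is a crude whitespace-based stand-in rather than a real tokenizer, and the `reserve` for the prompt and answer is an assumed value. Neither comes from Liquid AI.

```python
def count_tokens(text):
    """Crude stand-in for a tokenizer: counts whitespace-delimited words."""
    return len(text.split())

def pack_context(passages, budget=32_000, reserve=1_000):
    """Keep ranked passages, in order, until the token budget is used up.

    budget: context window size in tokens (32k per the article).
    reserve: tokens held back for the prompt and the answer (assumed value).
    """
    remaining = budget - reserve
    kept = []
    for passage in passages:
        cost = count_tokens(passage)
        if cost > remaining:
            continue  # too large for the space left; a later, shorter passage may still fit
        kept.append(passage)
        remaining -= cost
    return kept

# Tiny budget for demonstration: 6 usable tokens after the reserve.
print(pack_context(["a b c", "d e f g h", "i j"], budget=7, reserve=1))
```

A larger window lets more passages survive this packing step, which is one practical reason longer context can improve retrieval-augmented answers.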

Deployment and Future Directions

Liquid AI’s LFMs are currently available for testing and deployment on several platforms, including Liquid Playground, Lambda (Chat UI and API), and Perplexity Labs, with Cerebras Inference to follow soon. Liquid AI’s roadmap suggests that it will continue to optimize the models and release new capabilities in the coming months, extending the range and applicability of the LFMs to industries such as financial services, biotechnology, and consumer electronics.

Regarding deployment strategy, the LFMs are designed to be adaptable across multiple modalities and hardware requirements. This adaptability is achieved through adaptive linear operators that are structured to respond dynamically based on inputs. Such flexibility is critical for deploying these models in environments ranging from high-end cloud servers to more resource-constrained edge devices.

Conclusion

Liquid AI’s first series of Liquid Foundation Models (LFMs) represents a promising step forward in developing generative AI models. LFMs aim to redefine what is possible in AI model design and deployment by achieving superior performance and efficiency. While these models are not open-sourced and are only available as part of a controlled release, their unique architecture and innovative approach position them as significant contenders in the AI landscape.


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform has over 2 million monthly views, illustrating its popularity among audiences.