Meet Laminar AI: A Developer Platform that Combines Orchestration, Evaluations, Data, and Observability to Empower AI Developers to Ship Reliable LLM Applications 10x Faster

Because LLM outputs are inherently nondeterministic, building reliable software on top of them (such as LLM agents) requires continuous monitoring, a systematic approach to testing changes, and quick iteration on core logic and prompts. Current solutions are vertical, so developers still have to maintain the “glue” between them, which slows them down.

Laminar is an AI developer platform that aims to help teams deliver dependable LLM apps ten times faster by integrating orchestration, evaluations, data, and observability. Laminar’s graphical user interface (GUI) lets developers build LLM applications as dynamic graphs that interface with local code. Developers can immediately import an open-source package that generates abstraction-free code from these graphs. Laminar also offers data infrastructure with built-in support for vector search across datasets and files, as well as an evaluation platform that lets developers create custom evaluators quickly without having to manage the evaluation infrastructure themselves.

A self-improving data flywheel becomes possible when data is easily ingested into LLMs and LLMs write back to datasets. Laminar also provides a low-latency logging and observability architecture. The Laminar AI team has built an LLM “IDE” in which you can construct LLM applications as dynamic graphs.

Integrating graphs with local code is straightforward. A “function node” can access server-side functions through the user interface or the software development kit (SDK). This changes how LLM agents, which invoke various tools and then loop back to the LLM with the response, are tested. Users keep complete control over the code because it is generated as pure functions inside their repository, which developers tired of frameworks with many layers of abstraction will appreciate. Pipelines are executed by a proprietary async engine built in Rust and can be easily deployed as scalable API endpoints.
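To make the agent pattern described above concrete, here is a minimal sketch of a loop in which a model requests tools and the results are fed back to it. It does not use Laminar's SDK: the tool functions, the `fake_llm` stand-in, and the message format are all hypothetical, chosen only to show tools written as plain, pure functions.

```python
from typing import Callable

# Hypothetical tools, written as plain pure functions that live in the repository.
def add(a: float, b: float) -> float:
    return a + b

def multiply(a: float, b: float) -> float:
    return a * b

TOOLS: dict[str, Callable[..., float]] = {"add": add, "multiply": multiply}

def fake_llm(history: list[dict]) -> dict:
    """Stand-in for a real model call (an assumption for this sketch).

    It requests one tool per step until two tool results are in the history,
    then returns a final answer.
    """
    tool_results = [m["content"] for m in history if m["role"] == "tool"]
    if len(tool_results) == 0:
        return {"tool": "add", "args": {"a": 2, "b": 3}}
    if len(tool_results) == 1:
        return {"tool": "multiply", "args": {"a": tool_results[0], "b": 4}}
    return {"answer": f"The result is {tool_results[-1]}"}

def run_agent(question: str, max_steps: int = 5) -> str:
    """Call the model, run the tool it asks for, and loop back with the result."""
    history: list[dict] = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        step = fake_llm(history)
        if "answer" in step:
            return step["answer"]
        result = TOOLS[step["tool"]](**step["args"])
        history.append({"role": "tool", "name": step["tool"], "content": result})
    raise RuntimeError("agent did not finish within max_steps")

if __name__ == "__main__":
    print(run_agent("What is (2 + 3) * 4?"))
```

Because each tool is an ordinary function, it can be unit-tested on its own, and the loop can be tested by swapping the model for a deterministic stand-in like the one here.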

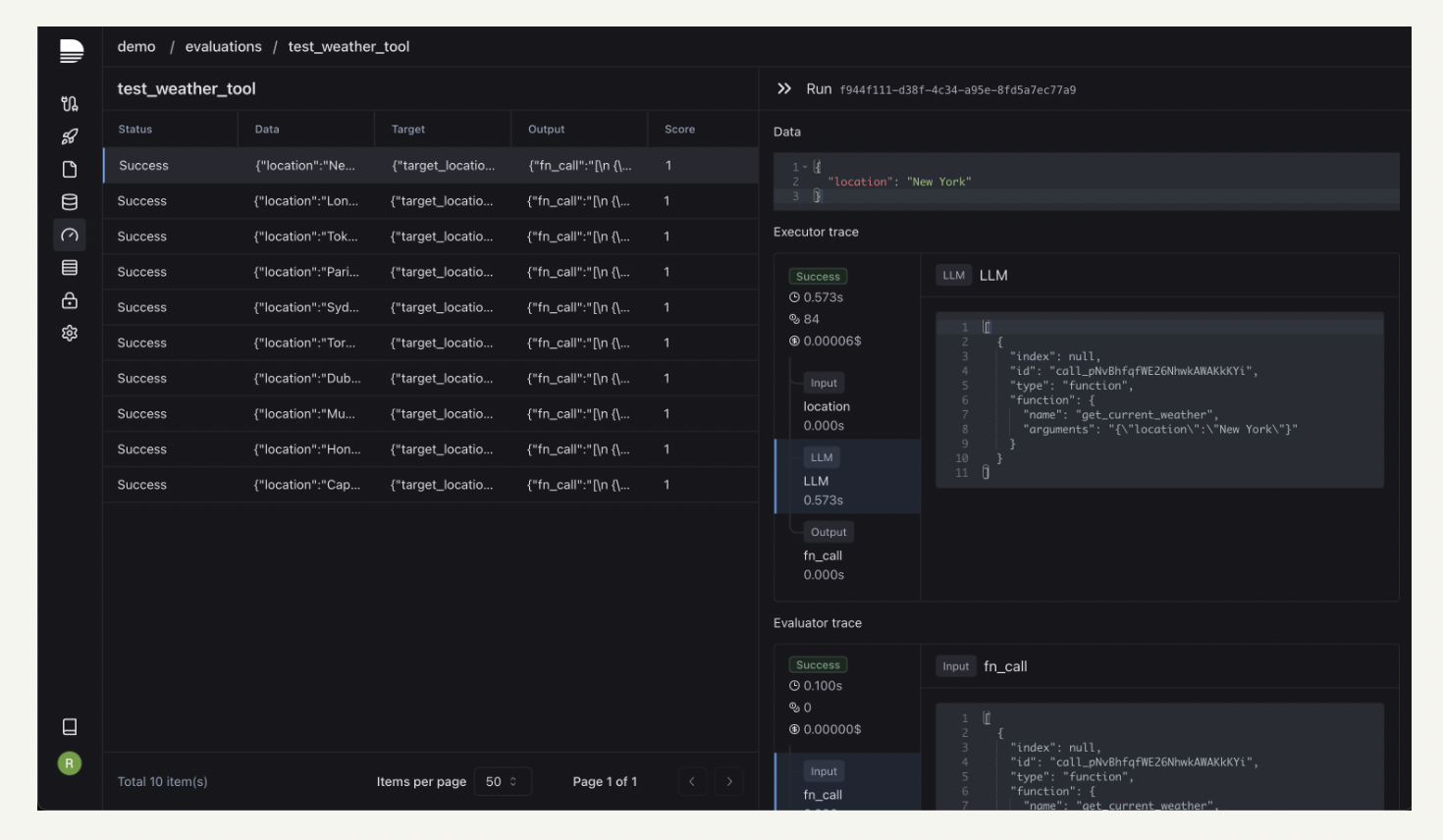

Customizable evaluation pipelines that integrate with local code are easy to construct with Laminar’s pipeline builder. A simple check such as exact matching can serve as the foundation for a more complex, application-specific LLM-as-a-judge pipeline. Users can upload massive datasets, run evaluations on thousands of data points simultaneously, and get all run statistics in real time, all without having to manage evaluation infrastructure themselves.
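As a rough illustration of how an evaluation can start with exact matching and later swap in a more lenient judge, consider the sketch below. It is not Laminar's evaluation API: the `Evaluator` type, the datapoint fields `output` and `target`, and `keyword_judge` (a trivial placeholder where an LLM-as-a-judge call would go) are assumptions made for this example.

```python
import asyncio
from typing import Awaitable, Callable

# An evaluator takes (model output, expected target) and returns a score in [0, 1].
Evaluator = Callable[[str, str], Awaitable[float]]

async def exact_match(output: str, target: str) -> float:
    """Simplest evaluator: 1.0 only when the strings match after trimming."""
    return 1.0 if output.strip() == target.strip() else 0.0

async def keyword_judge(output: str, target: str) -> float:
    """Placeholder for an LLM-as-a-judge call (hypothetical).

    Here it only checks case-insensitive containment of the target.
    """
    return 1.0 if target.strip().lower() in output.lower() else 0.0

async def run_evaluation(
    datapoints: list[dict], evaluator: Evaluator, concurrency: int = 8
) -> dict:
    """Score all datapoints concurrently, with a cap on in-flight evaluations."""
    semaphore = asyncio.Semaphore(concurrency)

    async def score(dp: dict) -> float:
        async with semaphore:
            return await evaluator(dp["output"], dp["target"])

    scores = await asyncio.gather(*(score(dp) for dp in datapoints))
    mean = sum(scores) / len(scores) if scores else 0.0
    return {"count": len(scores), "mean_score": mean}

if __name__ == "__main__":
    data = [
        {"output": "Paris", "target": "Paris"},
        {"output": "The capital is Paris.", "target": "Paris"},
    ]
    print(asyncio.run(run_evaluation(data, exact_match)))
    print(asyncio.run(run_evaluation(data, keyword_judge)))
```

Keeping every evaluator behind the same signature means the strict check and the judge are interchangeable, so the same dataset can be rescored without changing the runner.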

Whether users host LLM pipelines on the platform or generate code from graphs, they can analyze the traces in the UI. Laminar AI logs all pipeline runs and all endpoint requests, and users can view comprehensive traces of each pipeline run. To minimize latency overhead, logs are written asynchronously.
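The idea of writing logs asynchronously so that the request path is not slowed down can be sketched with Python's standard library alone. This is not how Laminar implements its logging (the article does not say); the logger name, the in-memory `ListHandler` sink, and the `run_pipeline` function are hypothetical stand-ins.

```python
import logging
import logging.handlers
import queue

class ListHandler(logging.Handler):
    """In-memory sink that stands in for a slow destination such as a database."""

    def __init__(self) -> None:
        super().__init__()
        self.records: list[str] = []

    def emit(self, record: logging.LogRecord) -> None:
        self.records.append(record.getMessage())

def make_async_logger(sink: logging.Handler):
    """Return a logger whose calls only enqueue records.

    A background thread owned by the QueueListener drains the queue and
    forwards each record to the sink.
    """
    log_queue: queue.Queue = queue.Queue()
    logger = logging.getLogger("pipeline_trace")  # hypothetical logger name
    logger.setLevel(logging.INFO)
    logger.propagate = False
    logger.handlers.clear()
    logger.addHandler(logging.handlers.QueueHandler(log_queue))
    listener = logging.handlers.QueueListener(log_queue, sink)
    listener.start()
    return logger, listener

def run_pipeline(logger: logging.Logger, prompt: str) -> str:
    """Toy pipeline: the logging calls return as soon as the record is queued."""
    logger.info("start prompt=%s", prompt)
    output = prompt.upper()
    logger.info("end output=%s", output)
    return output

if __name__ == "__main__":
    sink = ListHandler()
    logger, listener = make_async_logger(sink)
    print(run_pipeline(logger, "hello"))
    listener.stop()  # flushes the remaining records before returning
    print(sink.records)
```

The trade-off is that records can arrive at the sink slightly after the request finishes, which is usually acceptable for traces.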

Key Features

- Fully managed semantic search across datasets. Vector databases, embeddings, and chunking are all handled by the platform.

- Write code your own way, with full access to all of Python’s standard libraries.

- Conveniently choose between many models, like GPT-4o, Claude, Llama3, and many more.

- Create and test pipelines collaboratively, with an experience similar to tools like Figma.

- Smooth integration of graph logic with local code execution. Intervene between node executions by calling local functions.

- The user-friendly interface makes it easy to construct and debug agents that make many calls to local functions.
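For readers unfamiliar with the pieces named in the first feature (chunking, embeddings, and vector search), the toy sketch below shows how they fit together. It is not Laminar's implementation: `embed` uses simple word counts rather than a learned embedding model, so it matches on shared words instead of meaning, and all function names here are hypothetical.

```python
import math
import re
from collections import Counter

def chunk(text: str, size: int = 8) -> list[str]:
    """Split text into consecutive chunks of at most `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

def embed(text: str) -> Counter:
    """Toy embedding: a sparse vector of word counts (an assumption for this sketch)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def search(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Chunk every document, embed the chunks, and return the closest ones."""
    chunks = [c for doc in documents for c in chunk(doc)]
    query_vec = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(query_vec, embed(c)), reverse=True)
    return ranked[:top_k]

if __name__ == "__main__":
    docs = [
        "Logs are written asynchronously to keep latency low.",
        "Evaluators score model outputs against targets.",
    ]
    print(search("how are logs written", docs))
```

A managed service replaces each of these functions with a production component (a chunking policy, an embedding model, and a vector database), which is the work the feature list says developers no longer handle themselves.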

In Conclusion

Given the many obstacles developers face when building LLM apps, Laminar AI stands out as a potentially game-changing platform. By providing a unified solution for evaluation, orchestration, data management, and observability, Laminar AI aims to let developers build LLM agents more quickly. As demand for LLM-driven apps grows, platforms such as Laminar AI are likely to play an important role in driving innovation and shaping the future of AI.

Dhanshree Shenwai is a Computer Science Engineer with experience in FinTech companies covering the Financial, Cards & Payments, and Banking domains, and a keen interest in applications of AI. She is enthusiastic about exploring new technologies and advancements that make everyone’s life easier in today’s evolving world.