Semantic Hearing: A Machine Learning-Based Capability That Lets Hearable Devices Focus on or Ignore Specific Sounds in Real Environments While Preserving Spatial Awareness

Researchers from the University of Washington and Microsoft have introduced noise-canceling headphones with "semantic hearing": a machine learning system that lets wearers pick the sounds they want to hear while suppressing all other auditory distractions.

The team described the central challenge behind the work. Current noise-canceling headphones lack the real-time intelligence needed to recognize specific sounds in the surrounding environment and isolate them. That processing must also be nearly instantaneous: if what wearers hear lags behind what they see, the two senses fall out of sync, so any noticeable processing delay is unacceptable.

Unlike conventional noise-canceling headphones, which mainly muffle incoming sound or filter out selected frequencies, the prototype takes a different approach. It classifies incoming sounds and lets users personalize what they hear by choosing which sound classes to keep.
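One way to picture "choosing which sound classes to keep" is as a query vector that tells an extraction model which classes to pass through. The sketch below is purely illustrative: the class names, their order, and the multi-hot encoding are assumptions for this example, not the researchers' published interface.

```python
from typing import List, Sequence

# Hypothetical label set for illustration only. The prototype reportedly supports
# 20 classes; these six names and their order are assumptions.
SOUND_CLASSES: List[str] = [
    "speech", "vacuum_cleaner", "birds_chirping",
    "car_horn", "construction", "alarm",
]


def encode_targets(selected: Sequence[str],
                   classes: Sequence[str] = SOUND_CLASSES) -> List[float]:
    """Return a multi-hot query: 1.0 for each class the wearer wants to hear."""
    unknown = set(selected) - set(classes)
    if unknown:
        raise ValueError(f"unknown sound classes: {sorted(unknown)}")
    wanted = set(selected)
    return [1.0 if name in wanted else 0.0 for name in classes]


# Example: keep bird calls, suppress everything else.
query = encode_targets(["birds_chirping"])
print(query)  # [0.0, 0.0, 1.0, 0.0, 0.0, 0.0]
```

A multi-hot vector lets a wearer keep several classes at once (for example speech plus an alarm), which matches the kinds of scenarios the article describes.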

The researchers demonstrated the prototype in a series of trials: holding a conversation while a vacuum cleaner ran, tuning out street chatter to focus on bird calls, and suppressing construction noise while still hearing car horns. In another trial, the device aided meditation by silencing ambient noise except for an alarm marking the end of the session.

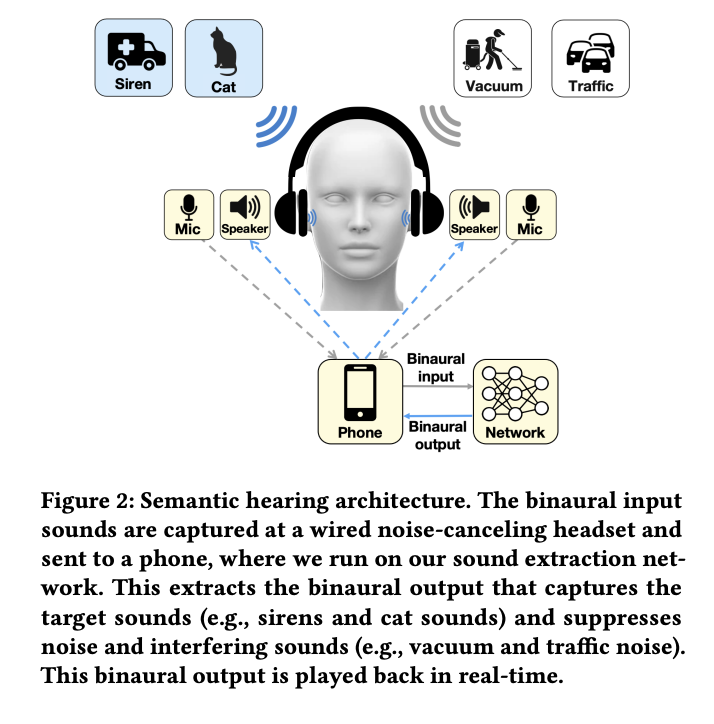

The key to fast sound processing is offloading the work to a device more powerful than anything that fits inside headphones: the user's smartphone. The phone runs a neural network designed specifically for binaural target sound extraction, which the researchers describe as a first.
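Binaural extraction matters because the brain localizes sounds partly from differences between the two ears. The sketch below illustrates one such cue, the interaural level difference (ILD), using a synthetic signal. It is not the researchers' neural network; it only shows, under the stated assumptions (a noise "source" that is louder in the left ear, a single scalar gain), why processing both ears consistently keeps the cue while collapsing to mono erases it. Interaural time differences are not modeled here.

```python
import numpy as np


def ild_db(left: np.ndarray, right: np.ndarray, eps: float = 1e-12) -> float:
    """Interaural level difference: left-to-right energy ratio in decibels."""
    return float(10.0 * np.log10((np.sum(left**2) + eps) / (np.sum(right**2) + eps)))


rng = np.random.default_rng(0)
source = rng.standard_normal(48_000)        # 1 s of synthetic noise at an assumed 48 kHz
left, right = 1.0 * source, 0.5 * source    # assumption: source sits closer to the left ear

# A binaural output that applies the same gain to both ears keeps the cue...
gain = 0.3
print(round(ild_db(gain * left, gain * right), 2))  # 6.02 (same as the input)

# ...whereas mixing to mono and copying it to both ears erases it.
mono = 0.5 * (left + right)
print(round(ild_db(mono, mono), 2))                 # 0.0
```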

In their experiments, the team worked with 20 distinct sound classes and showed that their transformer-based network runs in just 6.56 milliseconds on a connected smartphone. Real-world evaluations in new indoor and outdoor environments confirmed that the proof-of-concept system extracts the target sounds while preserving the spatial cues in its binaural output.
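Why does a 6.56 ms runtime matter? A streaming system processes audio in short chunks, and it only keeps up if each chunk is processed faster than the chunk lasts (a real-time factor below 1). The sketch below works through that arithmetic. The 48 kHz sample rate and 384-sample (8 ms) chunk are assumptions chosen for illustration, not figures from the article; only the 6.56 ms runtime comes from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StreamingBudget:
    sample_rate_hz: int   # assumed audio sample rate
    chunk_samples: int    # assumed samples processed per network call
    inference_ms: float   # per-chunk processing time

    @property
    def chunk_ms(self) -> float:
        """Duration of audio contained in one chunk."""
        return 1000.0 * self.chunk_samples / self.sample_rate_hz

    @property
    def real_time_factor(self) -> float:
        """Processing time divided by chunk duration; below 1.0 means it keeps up."""
        return self.inference_ms / self.chunk_ms

    def keeps_up(self) -> bool:
        return self.real_time_factor < 1.0


budget = StreamingBudget(sample_rate_hz=48_000, chunk_samples=384, inference_ms=6.56)
print(f"{budget.chunk_ms:.2f} ms chunk, RTF {budget.real_time_factor:.3f}, "
      f"keeps up: {budget.keeps_up()}")
# 8.00 ms chunk, RTF 0.820, keeps up: True
```

A real-time factor below 1 is necessary but not sufficient for low latency: the wearer also waits for each chunk to be buffered and for audio to travel between headphones and phone, so end-to-end delay is larger than the inference time alone.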

This advance in noise-canceling technology could improve listening experiences in a wide range of settings. By letting people curate their auditory environment in real time, these headphones go beyond what earlier noise-canceling designs can do. The team is continuing to refine the system and plans to publish its code, bringing personalized soundscapes closer to everyday use.

Check out the Paper and Project. All credit for this research goes to the researchers of this project.

Niharika is a technical consulting intern at Marktechpost. She is a third-year undergraduate pursuing her B.Tech at the Indian Institute of Technology (IIT) Kharagpur. She has a keen interest in machine learning, data science, and AI, and is an avid reader of the latest developments in these fields.