This AI Paper Releases a Detailed Review of Open-Source Large Language Models that Claim to Catch up with or Surpass ChatGPT in Different Tasks

The release of ChatGPT last year took the Artificial Intelligence community by storm. Built on OpenAI’s GPT family of transformer-based Large Language Models, ChatGPT has had a significant impact on both academic and commercial applications. The chatbot can respond to humans, generate content, answer queries, and perform a range of tasks, capabilities it owes to instruction-tuning through supervised fine-tuning and Reinforcement Learning from Human Feedback (RLHF).

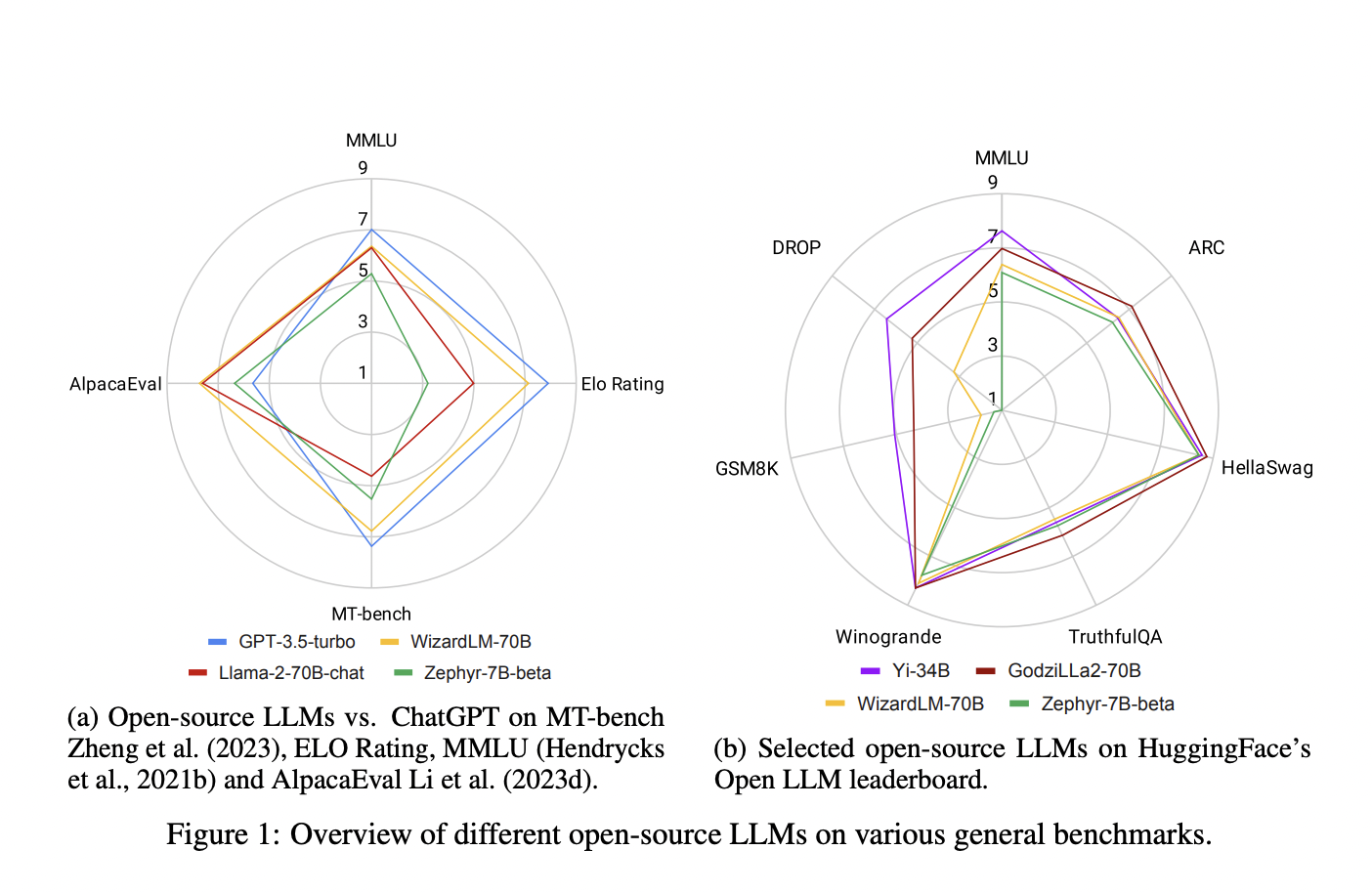

A team of researchers from NTU Singapore, Salesforce AI and I2R has conducted an extensive survey that compiles recent research on open-source Large Language Models (LLMs) and provides an overview of models claimed to perform as well as or better than ChatGPT in a variety of contexts. The release and success of ChatGPT have led to an upsurge in LLM-related work, with academia and industry producing an abundance of new LLMs, many of them from startups devoted to this field.

Although closed-source LLMs like OpenAI’s GPT and Anthropic’s Claude have generally done better than their open-source counterparts, open-source models have advanced rapidly. There have been increasing claims of attaining equal or even better performance on certain tasks, which has put closed-source models’ historical dominance at risk.

In terms of research, the continuous release of new open-source LLMs and their alleged successes has forced a reassessment of the strengths and weaknesses of these models. On the business side, the developments in open-source language models have presented challenges for organizations that wish to incorporate language models into their operations. Businesses now have more options when choosing the best model for their requirements, thanks to the possibility of obtaining performance that is on par with or better than proprietary alternatives.

The team has shared three primary categories that can be used to characterize the contributions of their survey.

- Consolidation of Assessments: The survey has compiled a variety of assessments of open-source LLMs in order to offer an objective and thorough viewpoint on how these models differ from ChatGPT. This synthesis gives readers a comprehensive understanding of the advantages and disadvantages of open-source LLMs relative to the ChatGPT benchmark.

- Systematic Review of Models: The survey examines open-source LLMs that are claimed to perform as well as or better than ChatGPT on various tasks. In addition, the team has shared a webpage that they will keep updated in real time so that readers can see the latest changes, reflecting the dynamic nature of open-source LLM development.

- Advice and Insights: In addition to reviews and assessments, the survey provides insights into the trends influencing the evolution of open-source LLMs. It also discusses potential problems with these models and explores best practices for training open-source LLMs. These findings offer a detailed perspective on the current state and future potential of open-source LLMs, catering to both the corporate sector and the scholarly community.

Check out the Paper. All credit for this research goes to the researchers of this project.

Tanya Malhotra is a final-year undergraduate at the University of Petroleum & Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning. She is a Data Science enthusiast with strong analytical and critical thinking skills, along with an ardent interest in acquiring new skills, leading groups, and managing work in an organized manner.