This AI Paper Reveals the Superiority of Generalist Language Models Over Clinical Counterparts in Semantic Search Tasks

The accuracy of semantic search, especially in clinical contexts, hinges on the ability to interpret and link varied expressions of medical terminologies. This task becomes particularly challenging with short-text scenarios like diagnostic codes or brief medical notes, where precision in understanding each term is critical. The conventional approach has relied heavily on specialized clinical embedding models designed to navigate the complexities of medical language. These models transform text into numerical representations, enabling the nuanced understanding necessary for effective semantic search in healthcare.
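To make the idea of comparing numerical representations concrete, here is a minimal sketch of embedding-based search using cosine similarity. The three-dimensional vectors are made up for illustration and are not produced by any model from the study; a real embedding model would output vectors with hundreds of dimensions. The function names are hypothetical.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)

def rank_by_similarity(query_vec, corpus_vecs):
    """Return (description, score) pairs sorted from most to least similar."""
    scored = [(desc, cosine_similarity(query_vec, vec)) for desc, vec in corpus_vecs.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Assumption: hand-written toy vectors standing in for real embeddings.
corpus = {
    "Essential (primary) hypertension": [0.9, 0.1, 0.0],
    "Type 2 diabetes mellitus without complications": [0.1, 0.9, 0.1],
    "Acute upper respiratory infection, unspecified": [0.0, 0.2, 0.9],
}

# Assumption: stand-in vector for a short query such as "high blood pressure".
query = [0.8, 0.2, 0.1]

ranking = rank_by_similarity(query, corpus)
best_match = ranking[0][0]
print(best_match)
```

In this toy setup the query vector points in nearly the same direction as the hypertension vector, so that description ranks first even though the query shares no words with it.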

Recent work has brought a new player into this domain: generalist embedding models. Unlike their specialized counterparts, these models are not trained exclusively on medical texts; they are trained on diverse datasets covering a broad spectrum of topics and languages. This broader training may give them a more robust grasp of language, leaving them better equipped to handle the variability and intricacy inherent in clinical texts.

Researchers from Kaduceo, Berliner Hochschule für Technik, and German Heart Center Munich analyzed the performance of these generalist models in clinical semantic search tasks. They constructed a dataset from ICD-10-CM code descriptions commonly used in US hospitals, together with reformulated versions of those descriptions. The dataset was then used to benchmark general and specialized embedding models on matching each reformulated text to its original description.
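A benchmark of this kind can be sketched as follows, assuming that "exact match" means the top-ranked description for a reformulated text is its original description. The `toy_embed` function is a hypothetical bag-of-words stand-in, not one of the models evaluated in the study, and the reformulations are invented for illustration.

```python
import math
import re
from collections import Counter

def toy_embed(text):
    """Assumption: a bag-of-words count vector standing in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a if token in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def exact_match_rate(pairs, descriptions, embed):
    """Fraction of reformulated texts whose top-ranked description is the original one."""
    desc_vecs = [(desc, embed(desc)) for desc in descriptions]
    hits = 0
    for reformulated, original in pairs:
        query_vec = embed(reformulated)
        best_desc = max(desc_vecs, key=lambda dv: cosine(query_vec, dv[1]))[0]
        hits += best_desc == original
    return hits / len(pairs)

descriptions = [
    "Essential (primary) hypertension",
    "Type 2 diabetes mellitus without complications",
    "Acute upper respiratory infection, unspecified",
]

# Assumption: invented reformulations paired with their original descriptions.
pairs = [
    ("primary hypertension", descriptions[0]),
    ("diabetes mellitus type 2 with no complications", descriptions[1]),
    ("common cold", descriptions[2]),
]

rate = exact_match_rate(pairs, descriptions, toy_embed)
print(f"exact match rate: {rate:.2f}")
```

The word-overlap stand-in misses "common cold" because it shares no tokens with the original description. Resolving that kind of reformulation is what a learned embedding model is expected to do, and swapping a real model in for `toy_embed` would let the same loop compare models.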

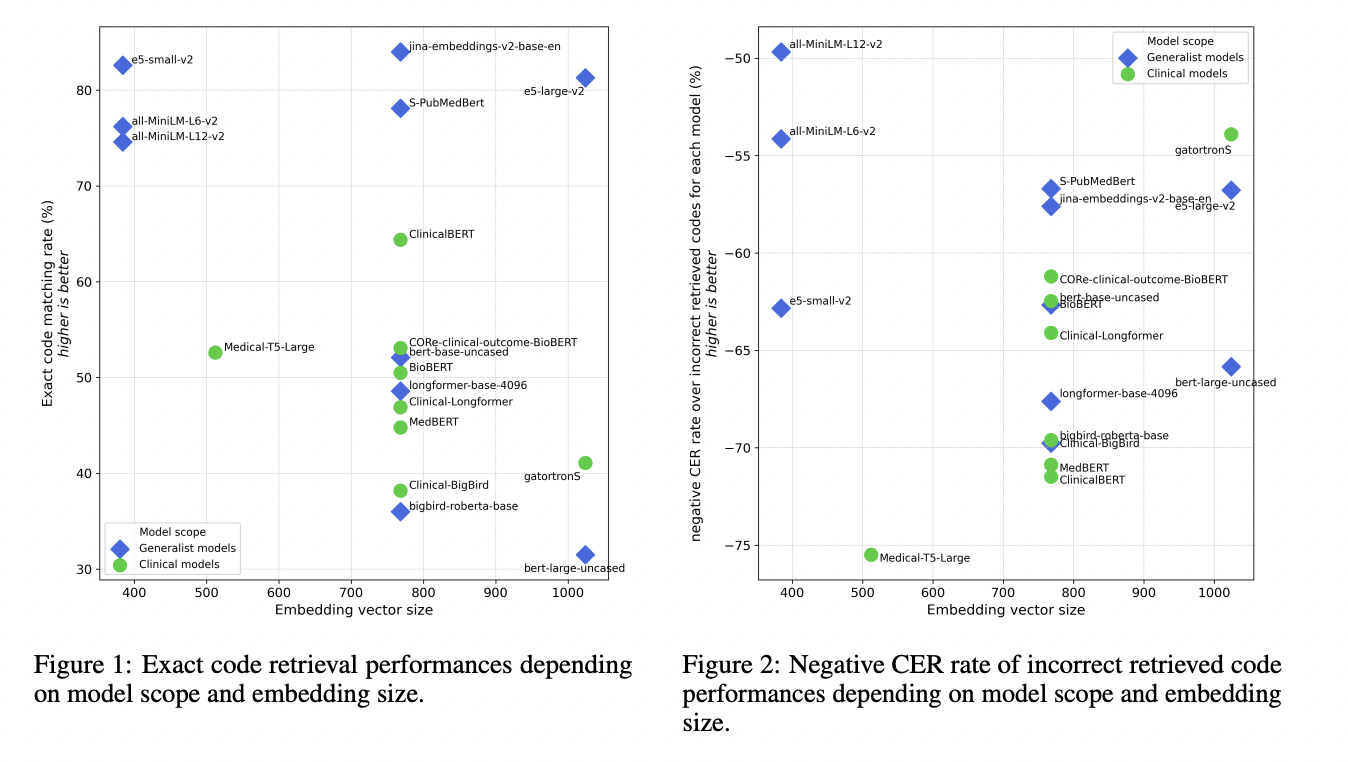

In this benchmark, generalist embedding models handled short-context clinical semantic search better than their clinical counterparts. The best-performing generalist model, jina-embeddings-v2-base-en, achieved a significantly higher exact match rate than the top-performing clinical model, ClinicalBERT. This performance gap highlights the robustness of generalist models in understanding and accurately linking medical terminologies, even when the same concept is expressed in varied ways.

This unexpected result challenges the notion that specialized tools are inherently better suited to specific domains. For tasks like clinical semantic search, a model trained on a broader range of data may hold the advantage. The finding underscores the potential of more versatile and adaptable AI tools in specialized fields such as healthcare.

In conclusion, the study marks a significant step in the evolution of medical informatics. It highlights the effectiveness of generalist embedding models in clinical semantic search, a domain traditionally dominated by specialized models. This shift in perspective could have far-reaching implications, paving the way for broader applications of AI in healthcare and beyond. The research contributes to our understanding of AI’s potential in medical contexts and opens doors to exploring the benefits of versatile AI tools in various specialized domains.

Check out the Paper. All credit for this research goes to the researchers of this project.


Sana Hassan, a consulting intern at Marktechpost and dual-degree student at IIT Madras, is passionate about applying technology and AI to address real-world challenges. With a keen interest in solving practical problems, he brings a fresh perspective to the intersection of AI and real-life solutions.