Voyage AI Introduces Voyage-3 and Voyage-3-Lite: A New Generation of Small Embedding Models that Outperform OpenAI v3 Large by 7.55%

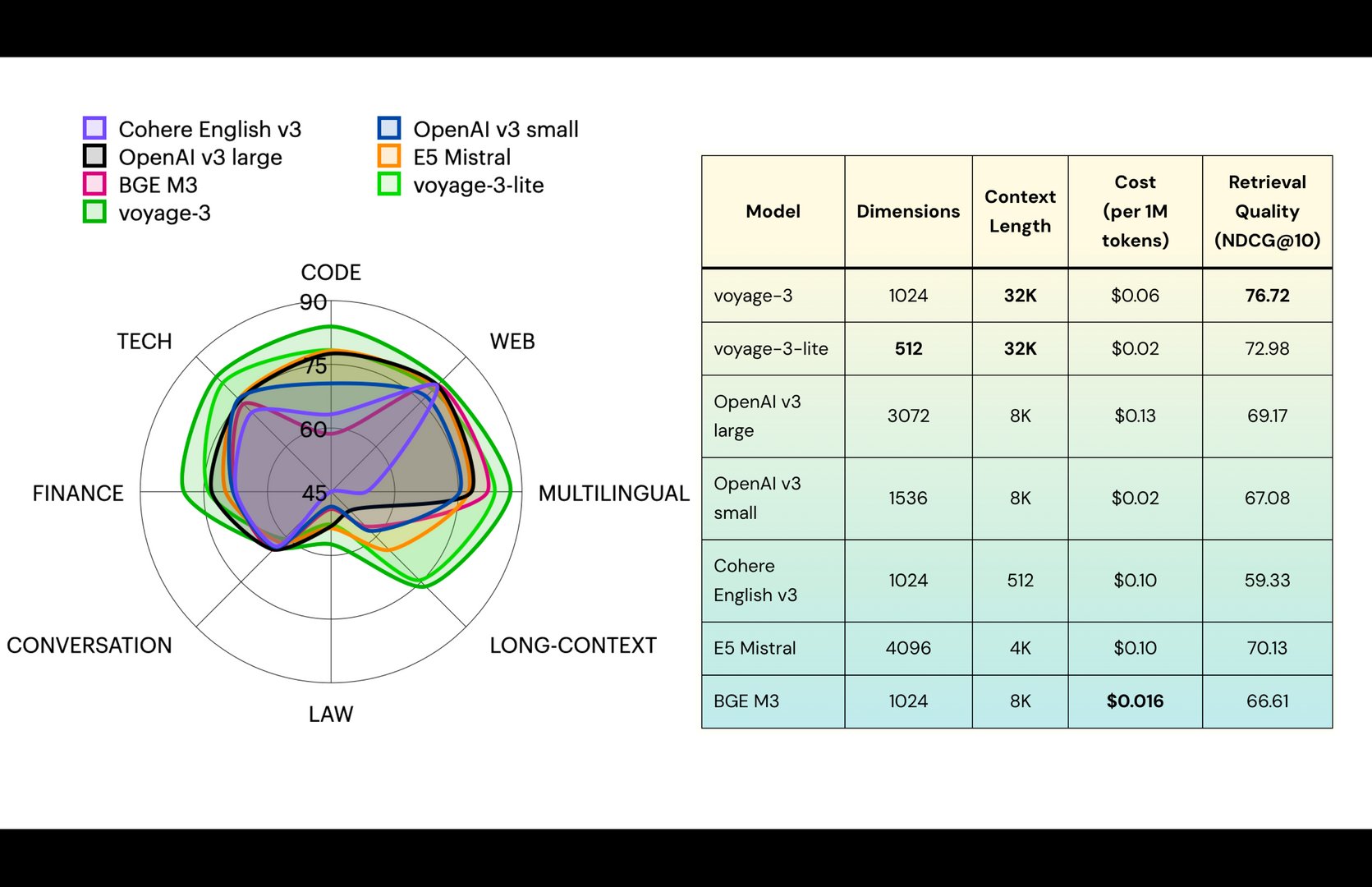

Voyage AI has announced the release of its new generation of embedding models, Voyage-3 and Voyage-3-Lite. The models are designed to outperform existing industry standards across domains including technology, law, finance, multilingual applications, and long-context understanding. According to Voyage AI's evaluations, Voyage-3 outperforms OpenAI's V3 large model by an average of 7.55% across all tested domains: technical documentation, code, law, finance, web content, multilingual datasets, long documents, and conversational data. It does so at 2.2x lower cost and with a 3x smaller embedding dimension, which translates to proportionally lower vector database (vectorDB) storage costs. Similarly, Voyage-3-Lite offers 3.82% better retrieval accuracy than OpenAI's V3 large model, at 6.5x lower cost and with a 6x smaller embedding dimension.

Cost Efficiency Without Compromising Quality

Cost efficiency is at the heart of the new Voyage-3 series. Voyage-3 supports a context length of 32,000 tokens, four times that of OpenAI's offering, while costing $0.06 per million tokens: 1.6x cheaper than Cohere English V3 and 2.2x cheaper than OpenAI's V3 large model. Its smaller embedding dimension (1024 vs. OpenAI's 3072) also lowers vectorDB costs, enabling companies to scale their applications efficiently.
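To make the vectorDB point concrete, here is a minimal sketch of the raw storage needed for float32 embeddings at the two dimensions quoted above. It assumes 4 bytes per value and ignores index structures, metadata, replication, and quantization, all of which real vector databases add or use; the 10-million-vector corpus is hypothetical.

```python
# Raw storage for float32 embeddings only (assumption: 4 bytes per value,
# no index overhead, metadata, replication, or quantization).
BYTES_PER_FLOAT32 = 4


def raw_embedding_bytes(num_vectors: int, dim: int, bytes_per_value: int = BYTES_PER_FLOAT32) -> int:
    """Return the bytes needed to store num_vectors embeddings of size dim."""
    return num_vectors * dim * bytes_per_value


if __name__ == "__main__":
    n = 10_000_000  # hypothetical corpus of 10 million chunks
    for name, dim in [("Voyage-3", 1024), ("OpenAI V3 large", 3072)]:
        gb = raw_embedding_bytes(n, dim) / 1e9
        print(f"{name:>16}: {dim:>4} dims -> {gb:.1f} GB")
```

Because storage grows linearly with dimension, a 3x smaller vector means roughly 3x less raw storage for the same corpus, before any database-specific overhead.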

Voyage-3-Lite, the lighter variant, is optimized for low latency. At $0.02 per million tokens, it is 6.5x cheaper than OpenAI's V3 large model and has a 6x smaller embedding dimension (512 vs. OpenAI's 3072). This makes Voyage-3-Lite a viable option for organizations that want high retrieval quality at a fraction of the cost.
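The price ratios quoted in this section come down to simple arithmetic. This sketch assumes OpenAI's V3 large model is priced at $0.13 per million tokens, a figure not stated in the article (check current pricing); the 5-billion-token corpus is hypothetical.

```python
# Prices in USD per million tokens. The Voyage prices come from the article;
# the OpenAI V3 large price is an assumption, not stated in the article.
PRICE_PER_MILLION = {
    "voyage-3": 0.06,
    "voyage-3-lite": 0.02,
    "openai-v3-large": 0.13,  # assumption: verify against current pricing
}


def embedding_cost(model: str, num_tokens: int) -> float:
    """USD cost of embedding num_tokens tokens with the given model."""
    return PRICE_PER_MILLION[model] * num_tokens / 1_000_000


def price_ratio(baseline: str, model: str) -> float:
    """How many times cheaper `model` is than `baseline`, per token."""
    return PRICE_PER_MILLION[baseline] / PRICE_PER_MILLION[model]


if __name__ == "__main__":
    tokens = 5_000_000_000  # hypothetical 5B-token corpus
    for model in PRICE_PER_MILLION:
        print(f"{model:>16}: ${embedding_cost(model, tokens):,.2f}")
    for model in ("voyage-3", "voyage-3-lite"):
        print(f"{model} is {price_ratio('openai-v3-large', model):.1f}x cheaper than OpenAI V3 large")
```

Under that assumed price, the ratios come out to about 2.2x for Voyage-3 (0.13 / 0.06 ≈ 2.17) and 6.5x for Voyage-3-Lite, matching the figures the article quotes.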

Versatility Across Multiple Domains

The Voyage-3 series builds on Voyage AI's work beyond general-purpose embeddings. Over the past nine months, the company has released its Voyage-2 series of embedding models, including Voyage-Large-2 and domain-specific models like Voyage-Code-2, Voyage-Law-2, Voyage-Finance-2, and Voyage-Multilingual-2. The domain-specific models have been extensively trained on data from their respective domains and perform exceptionally well in specialized use cases.

For example, Voyage-Multilingual-2 delivers superior retrieval quality in French, German, Japanese, Spanish, and Korean while maintaining best-in-class performance in English. These achievements testify to Voyage AI’s commitment to developing robust models tailored to specific business needs.

Technical Specifications and Innovations

Several research innovations underpin the development of Voyage-3 and Voyage-3-Lite. The models combine an improved architecture with distillation from larger models and pre-training on over 2 trillion high-quality tokens. In addition, retrieval results are aligned through human feedback, further improving the accuracy and relevance of the models.

Key technical specifications of the Voyage-3 series models include:

Voyage-3:

- Dimensions: 1024

- Context Length: 32,000 tokens

- Cost: $0.06 per million tokens

- Retrieval Quality (NDCG@10): 76 (outperforms OpenAI’s V3 large by 7.55%)

Voyage-3-Lite:

- Dimensions: 512

- Context Length: 32,000 tokens

- Cost: $0.02 per million tokens

- Retrieval Quality (NDCG@10): 72 (outperforms OpenAI’s V3 large by 3.82%)
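Both specification lists report retrieval quality as NDCG@10 (normalized discounted cumulative gain over the top 10 results). As a refresher, here is a minimal sketch of the metric with graded relevance labels. It uses the common exponential gain (2^rel - 1) with a log2 position discount; the article does not say which NDCG variant Voyage AI used, and the scores above appear to be reported on a 0-100 scale rather than 0-1.

```python
import math
from typing import Optional, Sequence


def dcg_at_k(relevances: Sequence[float], k: int) -> float:
    """Discounted cumulative gain over the first k ranked results."""
    # Position p (1-based) is discounted by log2(p + 1); enumerate() is 0-based, hence i + 2.
    return sum((2 ** rel - 1) / math.log2(i + 2) for i, rel in enumerate(relevances[:k]))


def ndcg_at_k(
    ranked_relevances: Sequence[float],
    k: int = 10,
    all_relevances: Optional[Sequence[float]] = None,
) -> float:
    """DCG of the system's ranking divided by the DCG of the ideal ranking.

    ranked_relevances: relevance labels of retrieved documents, in ranked order.
    all_relevances: labels of every judged document for the query, used to build
    the ideal ranking; defaults to ranked_relevances when omitted.
    """
    pool = ranked_relevances if all_relevances is None else all_relevances
    ideal = dcg_at_k(sorted(pool, reverse=True), k)
    return dcg_at_k(ranked_relevances, k) / ideal if ideal > 0 else 0.0


# Hypothetical query: a partially relevant document is ranked above a highly relevant one.
print(round(ndcg_at_k([1, 3, 0, 2]), 3))
```

A benchmark score such as the ones above is typically the mean of this per-query value across all queries in the evaluation set.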

The models’ ability to handle a 32,000-token context length, compared to OpenAI’s 8,000 tokens and Cohere’s 512 tokens, makes them suitable for applications requiring comprehensive understanding and retrieval of large documents, such as technical manuals, academic papers, and legal case summaries.
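To see why context length matters in practice, here is a sketch that splits a tokenized document into windows no longer than a model's limit, using the article's round figures (32,000, 8,000, and 512 tokens). The token list is a stand-in, since real tokenization is model-specific, and `chunk_tokens` is a hypothetical helper, not part of any Voyage SDK.

```python
from typing import List, Sequence


def chunk_tokens(tokens: Sequence[int], max_tokens: int, overlap: int = 0) -> List[Sequence[int]]:
    """Split tokens into windows of at most max_tokens; consecutive windows share `overlap` tokens."""
    if max_tokens <= 0 or not 0 <= overlap < max_tokens:
        raise ValueError("require max_tokens > 0 and 0 <= overlap < max_tokens")
    if not tokens:
        return []
    step = max_tokens - overlap
    chunks = []
    start = 0
    while True:
        chunks.append(tokens[start:start + max_tokens])
        if start + max_tokens >= len(tokens):  # this window reaches the end of the document
            break
        start += step
    return chunks


# Stand-in for a tokenized 100,000-token document; real token IDs depend on the tokenizer.
document = list(range(100_000))
for name, limit in [("Voyage-3", 32_000), ("OpenAI", 8_000), ("Cohere", 512)]:
    print(f"{name}: {len(chunk_tokens(document, limit))} chunks")
```

With no overlap, this document needs 4 chunks at a 32,000-token limit, 13 at 8,000, and 196 at 512. Fewer, longer chunks keep related passages together in a single embedding.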

Applications and Use Cases

The Voyage-3 series models cater to a wide range of industries, enabling applications in domains like:

- Technical Documentation: Providing accurate and context-aware retrieval from large technical manuals and programming guides.

- Code: Offering an enhanced understanding of code snippets, docstrings, and programming logic, making it well suited to software development and code review.

- Law: Supporting complex legal research by retrieving relevant court opinions, statutes, and legal arguments.

- Finance: Streamlining the retrieval of financial statements, SEC filings, and market analysis reports.

- Multilingual Applications: Facilitating multilingual search and retrieval across 26 languages, including French, German, Japanese, Spanish, and Korean.
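Whatever the domain, the retrieval step behind these use cases typically looks the same: embed the query and the documents, then rank documents by similarity. Here is a minimal pure-Python sketch using cosine similarity. The 3-dimensional vectors and document IDs are made up and stand in for real embeddings (Voyage-3 would return 1024-dimensional ones).

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query: Sequence[float], docs: Dict[str, Sequence[float]], k: int = 3) -> List[Tuple[str, float]]:
    """Return the k (doc_id, score) pairs most similar to the query, best first."""
    scored = [(doc_id, cosine_similarity(query, vec)) for doc_id, vec in docs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy vectors standing in for embeddings returned by a model.
docs = {
    "sec-filing": [0.9, 0.1, 0.0],
    "court-opinion": [0.0, 1.0, 0.2],
    "api-guide": [0.1, 0.0, 1.0],
}
query = [1.0, 0.2, 0.0]
for doc_id, score in top_k(query, docs, k=2):
    print(f"{doc_id}: {score:.3f}")
```

A linear scan is fine for a handful of vectors; at scale, document embeddings are usually precomputed and stored in a vector database with an approximate nearest-neighbor index. If the embeddings are unit-normalized, the dot product alone yields the same ranking as cosine similarity.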

Recommendations for Users

Voyage AI recommends that users of general-purpose embedding models upgrade to Voyage-3 for better retrieval quality at low cost. For further cost savings, Voyage-3-Lite offers an excellent balance between performance and affordability. Domain-specific use cases such as code, law, and finance can still benefit from Voyage-2 series models like Voyage-Code-2, Voyage-Law-2, and Voyage-Finance-2, although Voyage-3 is highly competitive in these areas as well.

Future Developments

The Voyage AI team continues to expand the capabilities of the Voyage-3 series. Voyage-3-Large is expected in the coming weeks and is intended to set a new standard for large-scale general-purpose embeddings.

Voyage AI's release of Voyage-3 and Voyage-3-Lite represents a major step forward in embedding technology, offering a combination of high performance, low cost, and versatility for businesses and developers.

For those interested in trying the Voyage-3 series, the first 200 million tokens are free. Users can start immediately by specifying "voyage-3" or "voyage-3-lite" as the model parameter in Voyage API calls.
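For reference, a call that selects one of the new models through the model parameter might look like the sketch below. It only builds the HTTP request with the standard library and sends it when an API key is present. The endpoint URL, the `input`/`model`/`input_type` field names, and the response shape are assumptions based on Voyage AI's public REST documentation, so verify them before relying on this; `build_embedding_request` is a hypothetical helper. Voyage AI also publishes an official `voyageai` Python package that wraps the same API.

```python
import json
import os
import urllib.request
from typing import List, Optional

# Assumed endpoint, based on Voyage AI's public REST docs; verify before use.
VOYAGE_EMBEDDINGS_URL = "https://api.voyageai.com/v1/embeddings"


def build_embedding_request(
    texts: List[str],
    api_key: str,
    model: str = "voyage-3",  # or "voyage-3-lite"
    input_type: Optional[str] = None,
) -> urllib.request.Request:
    """Build (but do not send) a POST request asking for embeddings of `texts`."""
    payload = {"input": texts, "model": model}
    if input_type is not None:
        payload["input_type"] = input_type  # assumed values: "query" or "document"
    return urllib.request.Request(
        VOYAGE_EMBEDDINGS_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


if __name__ == "__main__":
    key = os.environ.get("VOYAGE_API_KEY")
    if key:  # only hit the network when a key is configured
        req = build_embedding_request(["Voyage-3 supports 32K tokens."], key, model="voyage-3-lite")
        with urllib.request.urlopen(req) as resp:
            body = json.load(resp)
        # Assumed response shape: {"data": [{"embedding": [...]}, ...], ...}
        print(len(body["data"][0]["embedding"]))
```

Switching between the two models only changes the `model` string; the rest of the request stays the same.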


Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.