Amazon Researchers Present a Deep Learning Compiler for Training with Three Main Features: a Sync-Free Optimizer, Compiler Caching, and Multi-Threaded Execution

One of the biggest challenges in machine learning has always been training and deploying neural networks efficiently. The introduction of the transformer architecture was a turning point: it opened new opportunities for parallelizing and distributing gradient-descent training, which made it possible to train larger, more complex models at scale. However, the rapid growth in model size has created problems with memory limits and GPU availability. Many models are now larger than the memory available on a single GPU. The wide range of sizes among pre-trained language and vision models poses a further challenge. Compilation is a promising remedy that can help balance computational efficiency against model size.

In recent research, a team of Amazon researchers has introduced a deep learning compiler designed specifically for neural network training. Built around three components, namely a sync-free optimizer, compiler caching, and multi-threaded execution, the compiler achieved significant speedups over two traditional approaches, native implementations and PyTorch/XLA (the framework that connects PyTorch to the XLA, or Accelerated Linear Algebra, compiler), on common language and vision tasks.

The first component is a sync-free optimizer. Optimizers play a central role in neural network training: they update the model parameters to minimize the loss function. Conventional optimizer implementations often contain synchronization points, where execution must stop and wait for a result before it can continue, and these can become a bottleneck, particularly in distributed training. A sync-free optimizer reduces or eliminates these synchronization points, which lets more work run in parallel and makes better use of the available compute. This matters most when synchronization is what limits training speed and resource efficiency.
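The article does not say exactly how the optimizer avoids synchronization. One common source of such stalls, assumed here purely for illustration, is mixed-precision training: the optimizer checks the gradients for inf/NaN values and skips the step if any are found, and reading that flag in host code forces the host to wait for the device. The pure-Python sketch below contrasts a step that branches on the flag with one that folds the flag into an elementwise select. Plain lists stand in for device tensors, `where` stands in for an on-device select such as `torch.where`, and all function names are hypothetical.

```python
import math

def found_inf(grads):
    """True if any gradient is inf or NaN (the flag mixed-precision training checks)."""
    return any(not math.isfinite(g) for g in grads)

def sgd_step_with_sync(params, grads, lr):
    # Host-side branch on a device-computed flag: on an accelerator, evaluating
    # this `if` forces the host to wait for the device (a synchronization point).
    if found_inf(grads):
        return list(params)  # skip the step
    return [p - lr * g for p, g in zip(params, grads)]

def where(mask, a, b):
    """Elementwise select; stands in for an on-device op such as torch.where."""
    return [x if m else y for m, x, y in zip(mask, a, b)]

def sgd_step_sync_free(params, grads, lr):
    # Compute the flag and the tentative update without branching on the flag
    # in host code; on a device both would remain lazily evaluated tensors.
    skip = found_inf(grads)
    stepped = [p - lr * g for p, g in zip(params, grads)]  # may hold inf/NaN
    # Keep the old value wherever the step must be skipped.
    return where([skip] * len(params), params, stepped)
```

Both functions produce the same parameters; the difference is that the sync-free version never needs the flag's value on the host to decide what to do next, so a lazily executing device could run the whole update without pausing.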

Another important feature of the compiler is compiler caching. Compiled representations of neural network or computation-graph components are stored and later reused instead of being rebuilt. Recompiling the entire network from scratch every time a model is trained is wasteful; by saving and reusing previously compiled components, compiler caching avoids this repeated work and can substantially reduce training time. In effect, it saves computing resources by building on the results of earlier compilations.
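The core idea can be sketched as a dictionary from a graph signature to a compiled executable. This is a minimal illustration under stated assumptions, not the paper's implementation: the signature here (operation name, input length, and a baked-in constant) is a simplification, `compile_scale_graph` is a hypothetical stand-in for an expensive compilation step, and the cache lives only in memory rather than on disk.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Hashable, List

Executable = Callable[[List[float]], List[float]]

@dataclass
class CompilationCache:
    """Maps a graph signature to a previously compiled executable."""
    entries: Dict[Hashable, Executable] = field(default_factory=dict)
    compile_count: int = 0

    def get_or_compile(self, key: Hashable, compile_fn: Callable[[], Executable]) -> Executable:
        # Compile only on a cache miss; later calls with the same key reuse the result.
        if key not in self.entries:
            self.compile_count += 1
            self.entries[key] = compile_fn()
        return self.entries[key]

def compile_scale_graph(factor: float) -> Executable:
    # Hypothetical stand-in for an expensive compilation step.
    return lambda xs: [factor * x for x in xs]

cache = CompilationCache()

def train_step(batch: List[float], factor: float = 2.0) -> List[float]:
    # The graph's identity: operation, input shape, and baked-in constants.
    key = ("scale", len(batch), factor)
    executable = cache.get_or_compile(key, lambda: compile_scale_graph(factor))
    return executable(batch)
```

Two calls with batches of length 2 trigger a single compilation, while a batch of length 3 has a new signature and triggers a second one. This is also why varying input shapes can cause repeated recompilation in shape-specialized compilers such as XLA.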

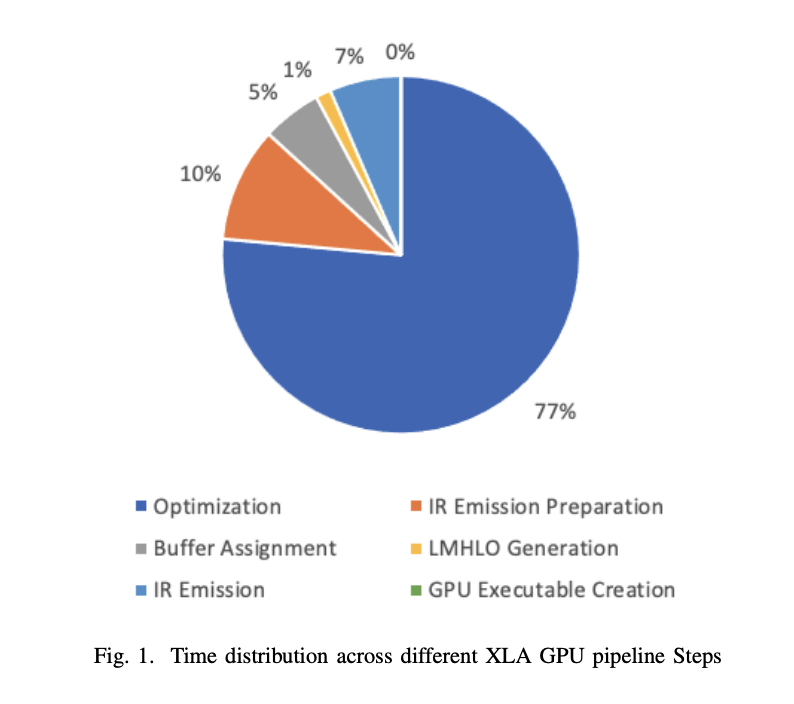

The third component is multi-threaded execution. Neural network training involves many operations that can be parallelized. With multi-threading, these operations can run concurrently on multi-core processors, which can yield significant speedups. By organizing the training procedure for multi-threaded execution, the compiler makes more effective use of the hardware and speeds up model training.
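The article does not specify which parts of training the compiler runs on separate threads. As a generic illustration (an assumption, not the paper's design), the sketch below runs eight independent operations first serially and then on a thread pool from the standard library's `concurrent.futures`. Because CPython's global interpreter lock (GIL) prevents pure-Python code from running on several cores at once, the hypothetical `independent_op` uses `time.sleep` to stand in for work that releases the GIL, such as a native kernel, I/O, or a device launch.

```python
import time
from concurrent.futures import ThreadPoolExecutor

def independent_op(i: int) -> int:
    # Stand-in for work that releases the GIL; time.sleep releases it too.
    time.sleep(0.05)
    return i * i

def run_serial(n: int) -> list:
    return [independent_op(i) for i in range(n)]

def run_threaded(n: int, workers: int = 4) -> list:
    # pool.map preserves input order, so results match the serial version.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(independent_op, range(n)))

if __name__ == "__main__":
    for runner in (run_serial, run_threaded):
        start = time.perf_counter()
        result = runner(8)
        print(runner.__name__, result, f"{time.perf_counter() - start:.2f}s")
```

With four workers, the eight operations finish in roughly a quarter of the serial time, while the results stay identical. A real compiler would apply the same principle to work that is genuinely independent, for example overlapping host-side preparation with device execution.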

The team demonstrated the practical value of these features by comparing their compiler against two established baselines: native implementations and PyTorch/XLA. The comparisons covered common computer vision and natural language processing workloads. Relative to both baselines, the results showed significant improvements in training speed and resource efficiency, highlighting the promise of deep learning compilers for making neural network training more efficient and practical in real-world applications.

In conclusion, this work is a major step forward for deep learning and has the potential to make training faster and more efficient. The experiments show that the researchers' changes to the PyTorch/XLA compiler are effective and can help speed up the training of neural network models across several domains and configurations.

Check out the Paper. All credit for this research goes to the researchers on this project.

Tanya Malhotra is a final-year undergraduate at the University of Petroleum & Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning. She is a data science enthusiast with strong analytical and critical-thinking skills, along with a keen interest in acquiring new skills, leading groups, and managing work in an organized manner.