Meet ScaleCrafter: Unlocking Ultra-High-Resolution Image Synthesis with Pre-trained Diffusion Models

Image synthesis has advanced rapidly in recent years, drawing major interest from both academia and industry. Text-to-image diffusion models such as Stable Diffusion (SD) are among the most widely used developments in this field. Although these models have demonstrated remarkable abilities, they can currently produce images only up to a maximum resolution of 1024 x 1024 pixels, which is insufficient for high-resolution applications like advertising.

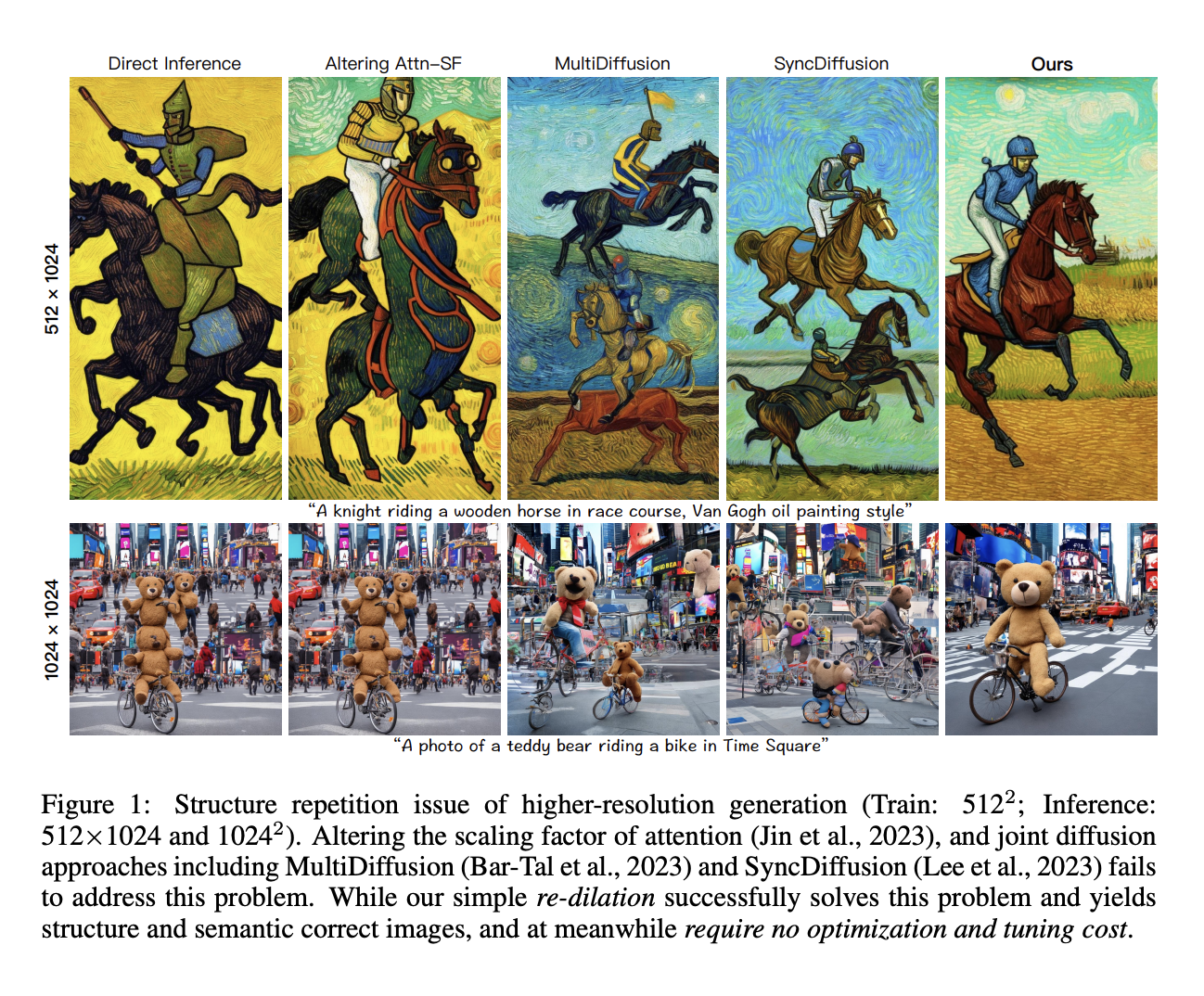

Problems arise when trying to generate images larger than the training resolution, mainly object repetition and deformed object structures. For example, when a Stable Diffusion model trained on 512 x 512 images is used to generate images at larger sizes such as 1024 x 1024, objects are duplicated, and the duplication becomes more severe as the image size increases.

Existing methods for creating higher-resolution images, such as those based on joint-diffusion techniques and attention mechanisms, struggle to address these issues adequately. The researchers examined the structural components of the U-Net architecture used in diffusion models and pinpointed a crucial cause: the limited receptive field of its convolutional kernels. In essence, issues like object repetition arise because each convolution sees only a limited region of its input, so the model cannot take in enough of a larger image to keep its content consistent.
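The receptive-field argument above can be made concrete with a little arithmetic. The sketch below uses the standard formula for a stack of convolutions, where each layer adds `(kernel_size - 1) * dilation` input pixels scaled by the product of the preceding strides. The three-layer `stack` is a made-up toy example, not the U-Net from the paper.

```python
def receptive_field(layers):
    """Receptive field, in input pixels, of one output unit.

    layers: list of (kernel_size, stride, dilation) tuples, input to output.
    """
    rf = 1    # a single unit sees one pixel before any convolution
    jump = 1  # spacing, in input pixels, between neighbouring units at this depth
    for kernel_size, stride, dilation in layers:
        rf += (kernel_size - 1) * dilation * jump
        jump *= stride
    return rf


# Hypothetical toy stack: three 3-tap convolutions, the middle one with stride 2.
stack = [(3, 1, 1), (3, 2, 1), (3, 1, 1)]
# The same weights with every dilation doubled.
dilated = [(k, s, 2 * d) for k, s, d in stack]

print(receptive_field(stack))    # 9
print(receptive_field(dilated))  # 17
```

Doubling the dilation roughly doubles the region each output unit sees without adding or retraining any weights, which is the lever a fixed-kernel network lacks when the image grows.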

A team of researchers has proposed ScaleCrafter for higher-resolution visual generation at inference time. It uses re-dilation, a simple yet effective technique that dynamically adjusts the convolutional receptive field during image generation. This lets the model handle higher resolutions and varying aspect ratios more effectively and improves the coherence and quality of the generated images. The work presents two further advances: dispersed convolution and noise-damped classifier-free guidance. With these, the model can produce ultra-high-resolution images of up to 4096 x 4096 pixels. The method requires no extra training or optimization stages, making it a practical solution to the repetition and structural problems of high-resolution image synthesis.
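To illustrate the idea of re-dilation, here is a minimal one-dimensional sketch in plain Python. It reuses a fixed set of "trained" weights and only spaces the kernel taps further apart when the input is longer than the training length. The function names and the rule of picking the dilation from the length ratio are assumptions made for this illustration; the actual method operates on the 2D convolutions inside the U-Net, and its exact dilation schedule is not described in this article.

```python
def conv1d(signal, kernel, dilation=1):
    """Valid-mode 1D cross-correlation with taps spaced `dilation` samples apart."""
    span = (len(kernel) - 1) * dilation + 1  # input samples covered by one output
    return [
        sum(w * signal[i + j * dilation] for j, w in enumerate(kernel))
        for i in range(len(signal) - span + 1)
    ]


def redilated_conv1d(signal, kernel, trained_len):
    """Apply unchanged weights with a dilation chosen at inference time.

    Hypothetical rule: dilation = inference length / training length, rounded.
    """
    dilation = max(1, round(len(signal) / trained_len))
    return conv1d(signal, kernel, dilation), dilation


kernel = [1, 0, -1]  # stand-in for trained weights (a simple difference filter)

# At the "training" length the kernel runs undilated and spans 3 samples.
small, d_small = redilated_conv1d(list(range(8)), kernel, trained_len=8)
# At twice the length the same weights span 5 samples.
large, d_large = redilated_conv1d(list(range(16)), kernel, trained_len=8)

print(d_small, small)  # 1 [-2, -2, -2, -2, -2, -2]
print(d_large, large)  # 2 [-4, -4, -4, -4, -4, -4, -4, -4, -4, -4, -4, -4]
```

The weights never change between the two calls; only the spacing between taps does, which is why this kind of adjustment needs no retraining.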

Comprehensive experiments show that the proposed method successfully addresses the object repetition issue and delivers state-of-the-art results in higher-resolution image generation, excelling especially at complex texture details. The work also shows that diffusion models already trained on low-resolution images can generate high-resolution visuals without extensive retraining, which could guide future work on ultra-high-resolution image and video synthesis.

The primary contributions are summarized as follows.

- The team found that the primary cause of object repetition is the limited receptive field of the convolutional operations, rather than the number of attention tokens.

- Based on this finding, the team proposed a re-dilation approach that dynamically enlarges the convolutional receptive field during inference, tackling the root of the issue.

- Two further strategies are presented, dispersed convolution and noise-damped classifier-free guidance, aimed specifically at generating ultra-high-resolution images.

- The method has been comprehensively evaluated across a variety of diffusion models, including different versions of Stable Diffusion, and has also been applied to a text-to-video model. The tests cover a wide range of aspect ratios and image resolutions, showcasing the method’s effectiveness in addressing object repetition and improving high-resolution image synthesis.
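For readers unfamiliar with the guidance term named in the contributions, the sketch below first shows standard classifier-free guidance, which combines an unconditional and a text-conditioned noise prediction. The `noise_damped_cfg` function is only an assumed reading of the "noise-damped" variant, in which the guidance direction comes from the re-dilated model while the base prediction comes from the original model. This article does not give the formula, so treat that function, its name, and its arguments as hypothetical and check the paper before relying on it.

```python
def cfg(eps_uncond, eps_cond, scale):
    """Standard classifier-free guidance: uncond + scale * (cond - uncond)."""
    return [u + scale * (c - u) for u, c in zip(eps_uncond, eps_cond)]


def noise_damped_cfg(base_uncond, dilated_uncond, dilated_cond, scale):
    """ASSUMED form of noise-damped guidance (not confirmed by this article).

    base_uncond:    unconditional prediction from the original model
    dilated_uncond: unconditional prediction from the re-dilated model
    dilated_cond:   conditional prediction from the re-dilated model
    """
    return [
        b + scale * (c - u)
        for b, u, c in zip(base_uncond, dilated_uncond, dilated_cond)
    ]


# Toy two-element "noise predictions" with values chosen to be exact in floats.
print(cfg([0.0, 1.0], [1.0, 3.0], 2.0))                            # [2.0, 5.0]
print(noise_damped_cfg([0.5, 0.5], [0.0, 1.0], [1.0, 3.0], 2.0))   # [2.5, 4.5]
```

Under this assumed form, the two functions coincide whenever the original and re-dilated models give the same unconditional prediction.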

Check out the Paper and Github. All credit for this research goes to the researchers on this project.

Tanya Malhotra is a final-year undergraduate at the University of Petroleum & Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning. She is a Data Science enthusiast with strong analytical and critical thinking skills, along with an ardent interest in acquiring new skills, leading groups, and managing work in an organized manner.