This AI Research by Microsoft and Tsinghua University Introduces EvoPrompt: A Novel AI Framework for Automatic Discrete Prompt Optimization Connecting LLMs and Evolutionary Algorithms

Large language models (LLMs) perform strongly across a wide range of NLP tasks. Fully fine-tuning them is expensive, however. That cost led to continuous prompt-tuning techniques, which learn trainable prompt embeddings while leaving the LLM's parameters unchanged. These methods still need access to the model's parameters and gradients, so they cannot be applied to LLMs that are only reachable through black-box APIs such as GPT-3 and GPT-4.

This paper presents the following contributions:

- Introduction of EVOPROMPT: The authors propose EVOPROMPT, a framework for automatically optimizing discrete prompts. It connects Large Language Models (LLMs) with Evolutionary Algorithms (EAs) and offers several advantages:

  - It does not require access to LLM parameters or gradients.

  - It balances exploration (searching broadly for new candidate prompts) with exploitation (refining the best prompts found so far), which leads to better results.

  - It produces prompts that humans can easily read and understand.

- Empirical Evidence: In experiments on nine datasets, EVOPROMPT outperforms existing methods. It improves results by up to 14% on tasks such as sentiment classification, topic classification, subjectivity classification, simplification, and summarization.

- Release of Optimal Prompts: The authors release the best prompts EVOPROMPT found for these common tasks. Researchers and practitioners working on sentiment analysis, topic classification, subjectivity classification, simplification, and summarization can reuse them.

- Innovative Use of LLMs: The paper pioneers the idea that LLMs, given appropriate instructions, can themselves implement evolutionary algorithms. This broadens the range of ways LLMs can be combined with traditional algorithms.
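The overall loop behind the contributions above can be sketched in Python. This is a minimal sketch under stated assumptions, not the authors' implementation. `evolve` stands in for a hypothetical LLM call that writes a new prompt from the current population. `score` stands in for a hypothetical black-box metric measured on a development set. Keeping the top-scoring prompts from parents plus children is one plausible selection rule among several. The sketch shows one key property: fitness comes only from evaluating outputs, so the loop never touches parameters or gradients.

```python
import random
from typing import Callable, List, Tuple

# Hypothetical signatures: `evolve` would wrap an LLM API call, `score` a dev-set metric.
EvolveFn = Callable[[List[str], random.Random], str]
ScoreFn = Callable[[str], float]


def evolve_prompts(
    initial_prompts: List[str],
    evolve: EvolveFn,
    score: ScoreFn,
    generations: int = 5,
    seed: int = 0,
) -> Tuple[str, float]:
    """Evolve a population of discrete prompts using only black-box scores."""
    rng = random.Random(seed)
    # Fitness is computed from model outputs alone: no weights, no gradients.
    population = [(p, score(p)) for p in initial_prompts]
    size = len(population)
    for _ in range(generations):
        children = []
        for _ in range(size):
            child = evolve([p for p, _ in population], rng)
            children.append((child, score(child)))
        # Elitist selection: keep the `size` best prompts among parents and children.
        population = sorted(population + children, key=lambda ps: ps[1], reverse=True)[:size]
    return population[0]


# Toy stand-ins so the sketch runs offline (NOT how EvoPrompt evaluates prompts).
KEYWORDS = {"sentiment", "positive", "negative", "review"}


def toy_score(prompt: str) -> float:
    # Count task-relevant keywords as a stand-in for dev-set accuracy.
    return float(sum(w.strip(".,?:").lower() in KEYWORDS for w in prompt.split()))


def toy_evolve(prompts: List[str], rng: random.Random) -> str:
    # Stand-in for an LLM call: splice two random parents at a word boundary.
    a, b = rng.sample(prompts, 2)
    wa, wb = a.split(), b.split()
    cut = rng.randint(1, min(len(wa), len(wb)))
    return " ".join(wa[:cut] + wb[cut:])


if __name__ == "__main__":
    seeds = ["Classify the review.", "Is the sentiment positive or negative?", "Label this text."]
    print(evolve_prompts(seeds, toy_evolve, toy_score, generations=3, seed=1))
```

Because selection here is elitist, the best score never drops from one generation to the next. The paper's GA and DE variants may use different selection and update rules.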

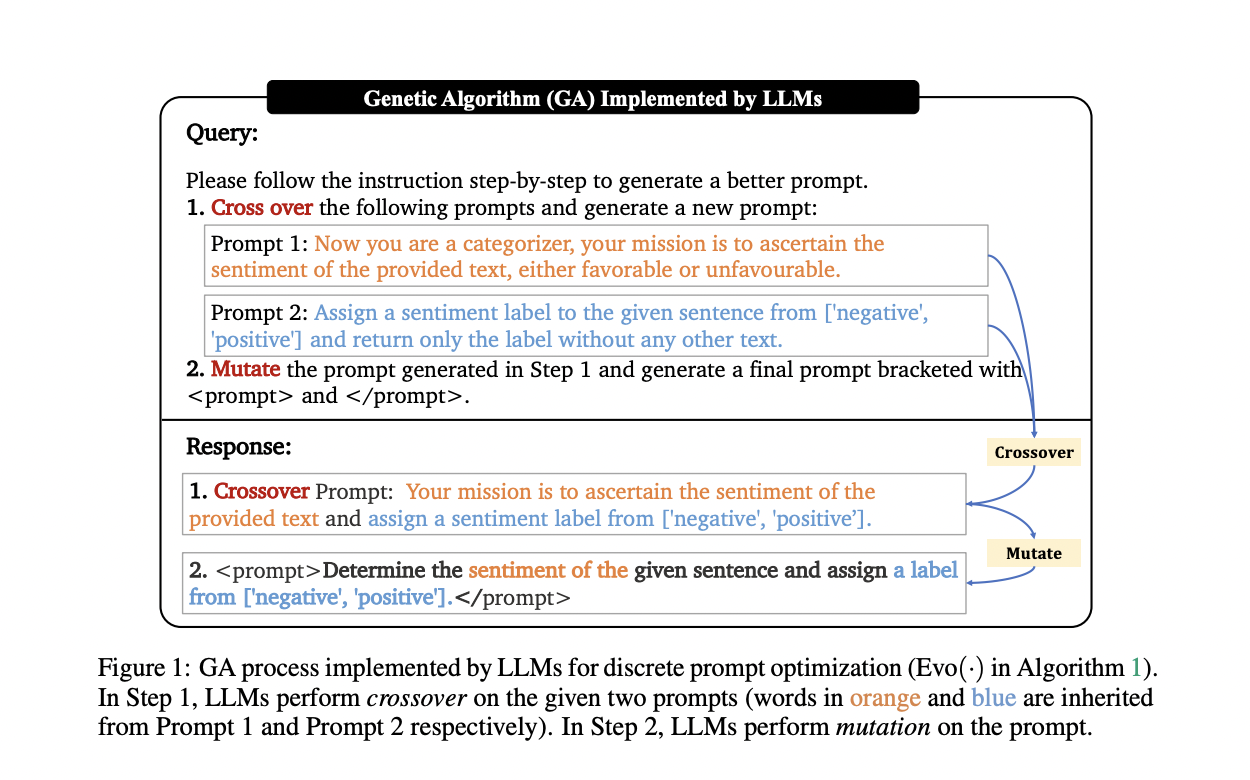

To put EVOPROMPT into practice, it must be paired with a specific evolutionary algorithm (EA). Many EAs exist, and the paper focuses on two widely used ones: the Genetic Algorithm (GA) and Differential Evolution (DE).
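For readers unfamiliar with DE, the sketch below shows the textbook DE/rand/1/bin scheme on real-valued vectors. It only makes the operators concrete. As described here, EvoPrompt keeps the evolutionary structure but has the LLM carry out the operators on text, and the paper's DE variant may differ in its details. The function name `de_generation` and the parameters `F` (scale factor) and `CR` (crossover rate) follow common DE conventions and are not taken from the paper.

```python
import random
from typing import Callable, List, Optional

Vector = List[float]


def de_generation(
    population: List[Vector],
    fitness: Callable[[Vector], float],
    F: float = 0.5,    # scale factor for the difference vector
    CR: float = 0.9,   # crossover rate
    rng: Optional[random.Random] = None,
) -> List[Vector]:
    """One generation of classic DE/rand/1/bin that maximizes `fitness`.

    Requires at least 4 individuals (a target plus 3 distinct donors).
    """
    rng = rng or random.Random(0)
    dim = len(population[0])
    next_population = []
    for i, target in enumerate(population):
        # Mutation: pick three distinct donors, none of them the target.
        a, b, c = rng.sample([p for j, p in enumerate(population) if j != i], 3)
        mutant = [a[k] + F * (b[k] - c[k]) for k in range(dim)]
        # Binomial crossover; index j_rand always comes from the mutant.
        j_rand = rng.randrange(dim)
        trial = [
            mutant[k] if (k == j_rand or rng.random() < CR) else target[k]
            for k in range(dim)
        ]
        # Greedy selection: the trial replaces the target only if it is no worse.
        next_population.append(trial if fitness(trial) >= fitness(target) else target)
    return next_population
```

The arithmetic `a + F * (b - c)` has no direct meaning for text. The idea the article describes is that an LLM can be instructed to perform analogous mutation and crossover steps in natural language.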

The image above shows the GA process carried out by LLMs for discrete prompt optimization. The authors argue that LLMs provide an effective and interpretable interface for implementing traditional algorithms, one that aligns well with how humans understand and communicate. Their findings are consistent with a recent line of work in which LLMs perform a kind of “Gradient Descent” in discrete space by collecting incorrectly predicted samples.
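One GA step with an LLM as the variation operator might look like the following. This is a hypothetical sketch, not the paper's code. The instruction text in `GA_TEMPLATE` is an illustrative paraphrase of a crossover-then-mutate GA step, the `<prompt>` tags are an assumed output convention, and `llm` is any function that maps an instruction string to a reply string. Parents are chosen by roulette-wheel (fitness-proportional) selection, a standard GA choice assumed here.

```python
import random
import re
from typing import Callable, Sequence

# Hypothetical instruction; the paper's exact wording is not reproduced here.
GA_TEMPLATE = (
    "Please follow the instructions step by step to generate a better prompt.\n"
    "1. Cross over the following prompts and generate a new prompt:\n"
    "Prompt 1: {p1}\n"
    "Prompt 2: {p2}\n"
    "2. Mutate the prompt generated in step 1 and return the final prompt "
    "between <prompt> and </prompt>."
)


def roulette_select(prompts: Sequence[str], scores: Sequence[float], rng: random.Random) -> str:
    """Pick one prompt with probability proportional to its (non-negative) score."""
    if sum(scores) <= 0:
        return rng.choice(list(prompts))  # all scores zero: fall back to a uniform choice
    return rng.choices(list(prompts), weights=list(scores), k=1)[0]


def ga_offspring(
    prompts: Sequence[str],
    scores: Sequence[float],
    llm: Callable[[str], str],
    rng: random.Random,
) -> str:
    """Select two parents, ask the LLM to cross over and mutate them, parse the child."""
    p1 = roulette_select(prompts, scores, rng)
    p2 = roulette_select(prompts, scores, rng)
    reply = llm(GA_TEMPLATE.format(p1=p1, p2=p2))
    match = re.search(r"<prompt>(.*?)</prompt>", reply, flags=re.DOTALL)
    # If the reply lacks the expected tags, keep the first parent unchanged.
    return match.group(1).strip() if match else p1


def fake_llm(instruction: str) -> str:
    # Offline stand-in for a black-box API such as GPT-3 or GPT-4.
    return "Here is the result. <prompt>Classify the sentiment of the review as positive or negative.</prompt>"
```

Wrapping the child in tags keeps the LLM's free-form reply parseable. The fallback to a parent keeps the population valid when the model ignores the requested format.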

Further research could explore how far LLMs can go in executing a diverse range of algorithms when guided by natural-language instructions from humans. One open question, for example, is whether LLMs can generate candidate solutions within other derivative-free algorithms, such as Simulated Annealing.
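The sketch below makes this open question concrete. It shows plain simulated annealing over prompts with a pluggable `propose` function. In the setting the authors suggest, that function could hypothetically be an LLM asked to rewrite the current prompt. Everything here is an assumption for illustration and is not part of the paper's method: the function names, the geometric cooling schedule, and the toy proposal and score.

```python
import math
import random
from typing import Callable, Tuple


def simulated_annealing(
    start: str,
    propose: Callable[[str, random.Random], str],  # hypothetically an LLM rewrite call
    score: Callable[[str], float],                 # hypothetical black-box metric
    steps: int = 200,
    t0: float = 1.0,
    cooling: float = 0.97,
    seed: int = 0,
) -> Tuple[str, float]:
    """Maximize `score` by simulated annealing over candidate prompts."""
    rng = random.Random(seed)
    current, current_score = start, score(start)
    best, best_score = current, current_score
    temp = t0
    for _ in range(steps):
        candidate = propose(current, rng)
        cand_score = score(candidate)
        delta = cand_score - current_score
        # Always accept non-worse candidates; accept worse ones with prob exp(delta / T).
        if delta >= 0 or rng.random() < math.exp(delta / temp):
            current, current_score = candidate, cand_score
            if current_score > best_score:
                best, best_score = current, current_score
        temp = max(temp * cooling, 1e-9)  # geometric cooling, floored to avoid division by zero
    return best, best_score
```

Early on, the high temperature lets the search accept worse prompts and escape local optima. As the temperature falls, the search settles into refining the best region found.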

Janhavi Lande is an Engineering Physics graduate from IIT Guwahati, class of 2023. She is an aspiring data scientist and has been working in ML/AI research for the past two years. She is most fascinated by this ever-changing world and the constant demand it places on people to keep up with it. In her spare time, she enjoys traveling, reading, and writing poems.